Increasing the level of navigation autonomy in mobile robots creates tangible business benefits for the companies that deploy them. But designing an autonomous navigation system is no easy task.

San Diego-based Brain Corporation, which spoke at our inaugural Robotics Summit & Showcase, seems to have figured it out, however. The company raised $114 million in mid-2017, in a round led by the SoftBank Vision Fund, for its BrainOS platform that creates autonomous robots using off-the-shelf hardware and sensors.

Savioke recently announced it will integrate BrainOS into its commercial service robots. For years, Savioke built its autonomous navigation stack from scratch using ROS, but that will no longer be the case.

“We want to focus on areas where Savioke can be unique, not on areas where we don’t have a competitive advantage,” Savioke founder and CEO Steve Cousins recently told The Robot Report. “Brain Corp. is doing some interesting stuff that is potentially game-changing in terms of the cost. They’re able to command volume pricing on sensors that we can’t. And they’re engineering things to fit together nicely.”

Many robotics developers still want to develop their own autonomous navigation systems, of course, and Brain Corp. is well aware of that. To help them out, Brain Corp. director of innovation Paul Behnke and VP of innovation Phil Duffy used a webinar, “Robotics: The Decathlon of Startups,” to share the following challenges you’ll encounter when designing an autonomous navigation system.

Getting the Software Right

“That’s definitely the first problem that comes to mind when you’re thinking about developing robotics for new environments,” Behnke said.

Getting the software right is especially challenging for robots that will be autonomously navigating in dynamic environments such as airports, malls, warehouses and other high-traffic workspaces. These areas often have tight spaces and continuously changing obstacles that require complex routes. The challenge is writing software that handles these issues with the end-user in mind.

At the same time, the robot must require minimal operator training and no environmental setup, while supporting single-shot learning by demonstration and productivity reporting.

Gathering Enough Real-World Data

Variables that impact autonomous navigation are not limited to physical obstacles crowding a robot’s work environment. Featureless environments, and even the time of day, add complexity to autonomous navigation. Many of these hurdles are edge cases that do not present themselves until after the software has been developed and the robots are tested in a live environment. Edge cases are the punch you don’t see coming. Behnke uses this example:

“Being able to navigate in a cluttered, dynamic environment with a lot of people moving around sounds difficult. But, an open gymnasium in a university is just as difficult because there’s a lack of features. There’s nothing, really, to anchor or tie into when you’re building your map.”

Success is contingent upon getting your robot, and the software that runs it, into many different environments early on. Functional autonomous navigation systems are not developed in a lab. Duffy said it’s fine to begin development there to create a demo or to get funding. But a lab environment can take you no further than those stages.

“Commercially, it won’t work until you’ve been in a number of scenarios because the problems that you’re going to experience in the wild cannot be replicated in a lab.”

You can’t solve or anticipate every edge case your robot will encounter in the real world. Keeping your customer’s employees involved in the installation process and giving them the tools to troubleshoot issues in real-time can improve your robot’s efficiency.

Duffy also cited examples such as a robot mistaking light from a reflective surface for a physical object, or infrared heaters disrupting the robot’s path. Essentially, the more edge cases you can solve, the better your navigation solution. Data is king.

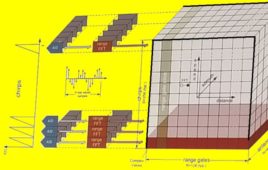

Creating Precision Motion Control

Duffy says the real key to designing a robot with autonomous navigation is creating a system that has precise and accurate motion control.

“For a lot of robots, you’re just taking a robot from point A to point B. With Brain Corporation, our initial market launch was these industrial floor care machines, and they need to drive as close to an edge, as close to a wall, as close to an obstacle as possible to maximize the amount of floor cleaning we provide.”

Highly accurate motion control is imperative if you want your robot to be able to handle complex, tight spaces. That’s something you can’t do with a robot that has a much larger footprint. Designing a system that is as tight and accurate as possible gives you much better capabilities to navigate complex spaces.

Brain Corp. shared this example that shows one of its machines navigating a tight space:

Reducing False Positives

Human detection is crucial for expanding end-user applications. If your robot can’t tell a person from a package on the floor, you’ve hamstrung your business before it starts.

“If you’re developing your own algorithms, if you are looking for navigation systems to use in your robotics project, then having a system that can recognize humans different to obstacles is essential. Unless you’re going to clear everybody out in the environment the robot works in, which limits the applications, you really need to solve the human element. This is one of the biggest problems you’ll solve,” Duffy said.

Being overly cautious, however, also has its problems. Behnke mentions that when Brain Corp. ran some initial pilot tests with its floor cleaning machine, the robots were checking, pausing, and analyzing for the sake of safety so often that people became less comfortable around them, in part because they thought the robots weren’t intelligent. It’s critical that you use sensor data from real-world scenarios and virtual environments to reduce false positives.
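To make the trade-off concrete, here is a minimal Python sketch of one common way to suppress one-off false positives: only treat an obstacle as real if it shows up in at least `k` of the last `n` sensor frames. This is an illustration only, not Brain Corp.’s method; the class name, the `count_stops` helper, and the window sizes are all hypothetical.

```python
from collections import deque


class DetectionDebouncer:
    """Confirm an obstacle only if it appears in at least k of the last n frames.

    Hypothetical illustration; names and thresholds are assumptions.
    """

    def __init__(self, n: int = 5, k: int = 3) -> None:
        if not 1 <= k <= n:
            raise ValueError("require 1 <= k <= n")
        self.k = k
        self.window = deque(maxlen=n)  # keeps only the most recent n frames

    def update(self, detected: bool) -> bool:
        """Add one raw detection and return the confirmed (debounced) state."""
        self.window.append(bool(detected))
        return sum(self.window) >= self.k


def count_stops(frames, n=5, k=3):
    """Count how often the confirmed state switches from clear to blocked."""
    debouncer = DetectionDebouncer(n, k)
    stops = 0
    blocked = False
    for detected in frames:
        confirmed = debouncer.update(detected)
        if confirmed and not blocked:
            stops += 1
        blocked = confirmed
    return stops


# Two isolated glints followed by one sustained detection (made-up data).
frames = [False, True, False, False, False, True, False, False,
          True, True, True, True, False, False]

raw_stops = count_stops(frames, n=1, k=1)       # reacts to every single frame
filtered_stops = count_stops(frames, n=5, k=3)  # needs 3 of the last 5 frames
print(raw_stops, filtered_stops)
```

On this made-up sequence the unfiltered robot stops three times, while the filtered one stops once, for the sustained detection only. The cost is latency: the filtered robot reacts one frame later here, so a real system would have to weigh this against its safety requirements.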

Installation Must be Simple

For your product to be genuinely scalable, the installation process must be simple, not technical. Many of today’s robots require an engineer for installation into a new environment. The process is simply beyond the skillset of non-technical staff. Such a technically complex launch can bottleneck this critical early stage; sending an engineer on-site to every new customer is neither sustainable nor scalable.

One way to counteract this challenge is to have your customers identify employees who might be capable of taking on installation as a new project. Another is to head off the problem in the design itself. You want your robot to look and feel familiar, like products your customer’s employees have used before. Make sure the user interface is lean and intuitive. Behnke uses the following example:

“We wanted to keep it as simple as possible… As you can see in this screenshot right here, there are only two choices for the user: Choose a route or teach a route.”

“And that’s the secret to dealing with non-technical employees that are using these machines. We’ve designed a system that is very easy to use. The user either uses the machine the way they always have and while they are doing that, it creates a map of the space and records the routes, or they set to play.”
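The two-choice workflow Behnke describes can be sketched as a tiny teach-and-repeat data structure. This is a hypothetical Python illustration, not BrainOS code: the `RouteBook` class, its method names, and the use of simple `(x, y)` poses are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class RouteBook:
    """Stores routes recorded during manual operation (hypothetical sketch)."""

    routes: dict = field(default_factory=dict)

    def menu(self):
        """The only two options shown to the operator."""
        return ["Choose a route", "Teach a route"]

    def teach(self, name, poses):
        """Record the (x, y) poses seen while the operator drives manually."""
        recorded = [(float(x), float(y)) for x, y in poses]
        if not recorded:
            raise ValueError("a route needs at least one pose")
        self.routes[name] = recorded

    def choose(self, name):
        """Return a copy of a stored route for autonomous replay."""
        return list(self.routes[name])


book = RouteBook()
# The operator drives the machine as usual; poses are logged along the way.
book.teach("aisle 1", [(0, 0), (1, 0), (2, 0.5)])
# Later, the same route is selected and replayed.
replay = book.choose("aisle 1")
print(book.menu(), replay)
```

Keeping the interface down to these two operations means all of the mapping and route recording happens as a side effect of work the operator already knows how to do.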

