The problem with data on the factory floor is that conditions constantly change. Sparse Modeling, an AI approach that recently emerged from academia, may solve this problem because it needs only a small data set. One added benefit: it works on ultra-low-power embedded computing platforms.

By Dan Demers, Director, Sales & Marketing, congatec Americas

Artificial intelligence has great potential to improve the performance and accuracy of modern visual inspection systems. But conventional AI approaches have some drawbacks:

- Vision-based Deep Learning must process every detail of a picture to provide reliable results. This is power and compute intensive, with lots of data movement between processor and memory. In one hour, a 60 fps camera with UHD resolution and 8 bit color depth produces as much as 5.18 terabytes of uncompressed data for analysis.

- Conventional AI needs a massive number of pictures to make reliable predictions, which consumes a lot of time and energy. Plus, recent studies indicate that a single AI model based on Deep Learning technology can cause up to as much pollution as five cars do over their entire lifecycles. Embedded systems cannot provide such computing performance; only datacenters can.

- The training data required for conventional Deep Learning based AI is not available within a few days or weeks. It can take a whole year or even longer to collect images of thousands of defective parts from a production line. If the production process is modified during that year, the definition of what is good or the nature of possible defects may have changed, rendering the previously gathered data virtually useless.

- Conventional AI always needs server-grade training via Deep Learning before an inference algorithm capable of making decisions at the smart factory edge can be compiled. Each new set of pictures needs the same resource-intensive training. This energy- and time-wasting process is repeated again and again for each new inference model that replaces the previous one. It’s a vicious circle.

- As the backbone of the industrial world, embedded systems are reliable and fail-safe. They typically feature low compute power for fan-less operation in a fully closed housing. Embedding AI via inference logic into these systems leads to higher performance requirements. Embedded systems that have no additional headroom in their thermal envelope risk losing durability with AI. They also need a channel for delivering Big Data to central clouds. This consumes additional energy and often produces costly data traffic. This is why, until now, the benefits of AI were reserved for high-compute environments with the ability to transmit edge data to external clouds.
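The data volume mentioned in the first point of this list can be checked with a quick back-of-the-envelope calculation. The sketch below is only an estimate under stated assumptions: a UHD frame of 3840 x 2160 pixels and three color channels at 8 bits each are assumed here, as the article does not spell out these details.

```python
# Back-of-the-envelope estimate of uncompressed camera data per hour.
# Assumptions (not stated in the article): 3840 x 2160 pixels, RGB.
WIDTH, HEIGHT = 3840, 2160   # assumed UHD frame size
CHANNELS = 3                 # assumed RGB
BYTES_PER_CHANNEL = 1        # 8 bit color depth per channel
FPS = 60                     # frames per second
SECONDS_PER_HOUR = 3600


def uncompressed_bytes_per_hour() -> int:
    """Return the raw data volume of one hour of video in bytes."""
    bytes_per_frame = WIDTH * HEIGHT * CHANNELS * BYTES_PER_CHANNEL
    return bytes_per_frame * FPS * SECONDS_PER_HOUR


total = uncompressed_bytes_per_hour()
terabytes = total / 1e12  # decimal terabytes
print(f"{total} bytes per hour, about {terabytes:.2f} TB")
```

Under these assumptions the result is roughly 5.4 TB per hour, the same order of magnitude as the figure quoted above. The exact value depends on the frame size, channel count and unit convention used.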

Sparse Modeling at the edge

Sparse Modeling offers a different approach and a broader path to bring AI to embedded low-power applications. It can continuously and dynamically adjust to changing conditions – such as lighting, vibrations, etc., or when cameras and/or equipment need to be moved – by re-training at the edge.

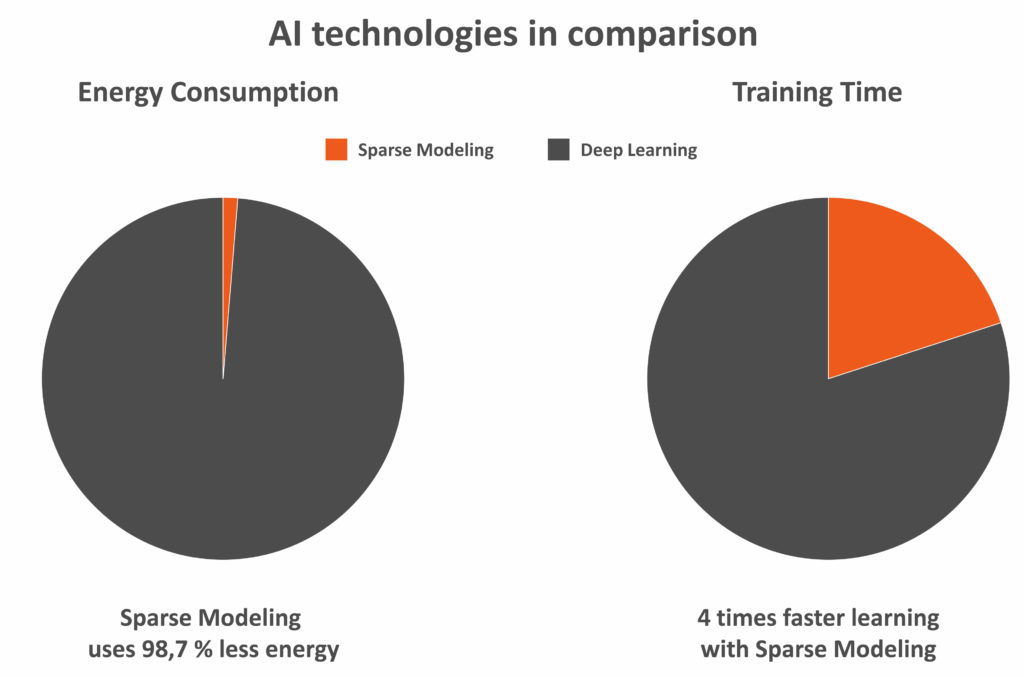

Tests have shown that for the same level of accuracy, Sparse Modeling consumes only 1% of the energy of a conventional Deep Learning platform. It is therefore a suitable AI technology for embedded systems.

Sparse Modeling understands data by focusing on identifying unique features, similar to the way the human mind does. Humans recognize friends and family based on key features, such as eyes or ears. Sparse Modeling embeds a comparable logic into smart vision systems, so the entire volume of Big Data does not need to be processed, as it does with conventional AI. Sparse Modeling based algorithms consequently reduce data down to just the unique features.

When presented with new data, rather than scanning the entire new entry, Sparse Modeling looks for the occurrence of previously determined key features. An added bonus of this approach is that the isolated features are understandable to humans, so Sparse Modeling produces an explainable, white box AI – which is another differentiator compared to conventional AI.
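To make the idea of looking for previously determined key features more concrete, here is a minimal sketch using matching pursuit, one well-known sparse coding technique. It is not the Hacarus implementation; the tiny hand-made feature dictionary, the feature names and the four-sample "signal" are all hypothetical and chosen only for illustration.

```python
import math

# Hypothetical, hand-made "key features" (unit-norm vectors) for a 4-sample signal.
S = 1 / math.sqrt(2)
FEATURES = {
    "flat": [0.5, 0.5, 0.5, 0.5],
    "step_edge": [0.5, 0.5, -0.5, -0.5],
    "left_detail": [S, -S, 0.0, 0.0],
    "right_detail": [0.0, 0.0, S, -S],
}


def dot(a, b):
    """Inner product of two equally long sequences."""
    return sum(x * y for x, y in zip(a, b))


def matching_pursuit(signal, features, max_features=2, tol=1e-9):
    """Greedily explain `signal` with at most `max_features` known features."""
    residual = list(signal)
    code = {}
    for _ in range(max_features):
        # Pick the feature that correlates most strongly with what is left.
        name, atom = max(features.items(), key=lambda kv: abs(dot(residual, kv[1])))
        weight = dot(residual, atom)
        if abs(weight) < tol:
            break  # nothing meaningful left to explain
        code[name] = code.get(name, 0.0) + weight
        # Remove the explained part from the residual.
        residual = [r - weight * a for r, a in zip(residual, atom)]
    return code, residual


signal = [2.5, 2.5, 0.5, 0.5]
code, residual = matching_pursuit(signal, FEATURES)
print(code)  # {'flat': 3.0, 'step_edge': 2.0}
```

The result names only the two features that occur in the signal, together with their weights, instead of storing all four samples. This is also what makes such a model readable for humans: the output says which known features were found and how strongly.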

The initial model creation stages, where the AI engine and customer-specific data are merged to create a model tailored for the specific use case, rely primarily on human expertise. Standard new inference models require only about 50 pictures for initial model creation. This enables engineers to build next-generation inspection systems that don’t always need a best-in-class setup, such as constant lighting conditions. They also gain greater flexibility to adapt to changing production processes, which is essential for industrial IoT/Industry 4.0 driven lot-size-one production.

Sparse Modeling on embedded edge devices

A Sparse Modeling platform is lightweight and resource efficient and can be embedded into more or less any edge device. It can run on embedded x86 computing platforms and is poised for implementation on platforms such as Xilinx and ARM or Altera and RISC-V. This compatibility with both mainstream x86 processors and currently emerging open source options makes the design future-proof. However, as the final footprint depends on the task to be solved and the complexity of the model required, a modular hardware platform based on Computer-on-Modules is recommended.

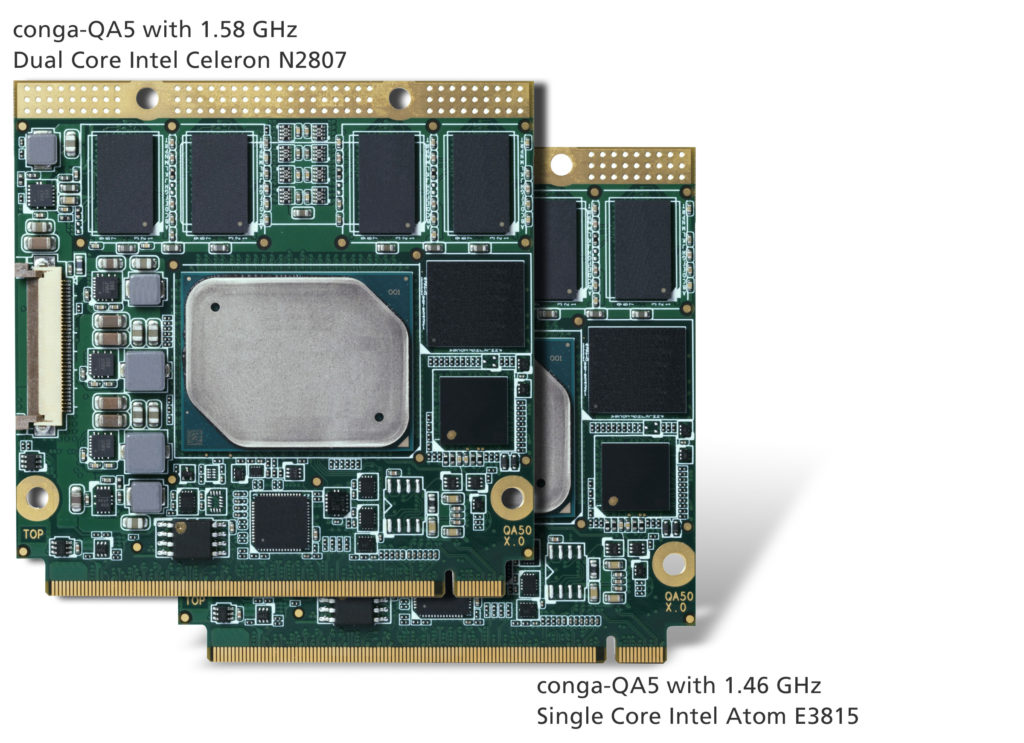

The first congatec conga-QA5 Computer-on-Modules supporting the Sparse Modeling software are based on Intel’s latest low-power microarchitecture code named Apollo Lake, which is available for series production.

A pioneer in Sparse Modeling in the manufacturing and medical fields is Hacarus. The company focuses on helping customers in industrial and medical use cases where rare conditions do not produce all the Big Data required to train a Deep Learning based AI model. Another application field is precision manufacturing, where edge nodes lack the compute power to perform inference and training in parallel, and where sending data to the cloud is not feasible because of confidentiality or connectivity concerns.

Sparse Modeling is energy friendly

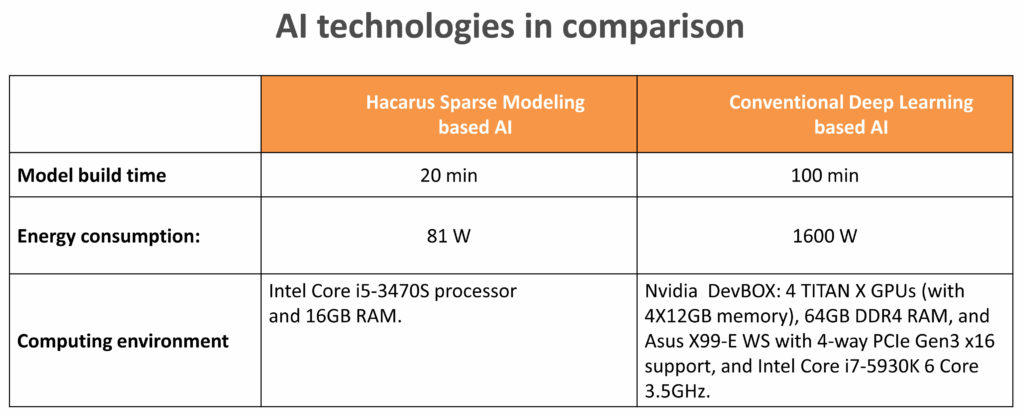

As part of a project with an industrial customer, Hacarus performed a comparison of its Sparse Modeling tool and a conventional Deep Learning based technique. For this sample study a data set of 1,000 images was used by both models to create predictions. The customer had defined the accepted model prediction probability as 90%.

Both approaches produced comparable results, but the required effort differed significantly: The Sparse Modeling based model was trained five times faster than the Deep Learning based model even though the Sparse Modeling tool ran on a standard x86 system with Intel Core i5-3470S Processor and 16 GB RAM. The Deep Learning model required an industrial-grade Nvidia DEVBOX based development platform with four TITAN X GPUs with 12 GB of memory per GPU, 64 GB DDR4 RAM, an Asus X99-E WS workstation class motherboard with 4-way PCI-E Gen3 x16 support and Core i7-5930K 6 Core 3.5GHz desktop processors.

The Sparse Modeling approach consumed 1% of the energy that the Deep Learning based approach used – with the same level of accuracy.

Only 50 pictures needed

The small footprint and modest performance requirements make it easy for vision system OEMs to implement AI. Existing platform solutions can often be re-used, and system integration is relatively straightforward because the Hacarus+ SDK (Software Development Kit) logic adapts to common vision inspection systems without much change to the setup. While existing visual inspection systems continue to perform their primary inspection, the software takes care of only those images that were identified as ‘not good,’ that is, ‘possibly defective.’

With around 50 or fewer such images, the Sparse Modeling tool can begin building a new inspection model. Once the model has been validated by human inspectors, it is ready to run as a second inspection loop beside the existing platform and will deliver the inspection results back to the established system via its APIs.
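The second inspection loop described above can be sketched roughly as follows. This only illustrates the data flow and is not the Hacarus+ SDK API: the `Inspection` record, the `second_inspection_loop` function and the stand-in model are hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable


@dataclass
class Inspection:
    """Result from the existing (primary) vision inspection system."""
    image_id: str
    primary_ok: bool  # False means 'not good', i.e. possibly defective


def second_inspection_loop(
    inspections: Iterable[Inspection],
    is_defect: Callable[[str], bool],
) -> Dict[str, str]:
    """Re-check only the images the primary system flagged as 'not good'."""
    verdicts = {}
    for item in inspections:
        if item.primary_ok:
            # The primary verdict stands; the second loop is never invoked.
            verdicts[item.image_id] = "good"
        else:
            # Only flagged images reach the (hypothetical) secondary model.
            verdicts[item.image_id] = "defect" if is_defect(item.image_id) else "good"
    return verdicts


# Stand-in for a validated model: pretends that only image "c" is a real defect.
def fake_model(image_id: str) -> bool:
    return image_id == "c"


batch = [Inspection("a", True), Inspection("b", False), Inspection("c", False)]
results = second_inspection_loop(batch, fake_model)
print(results)
```

In this arrangement the existing system keeps doing the bulk of the work, and the second loop sees only the small share of images that were flagged.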

An optional HTML-based user interface is available for monitoring. The tool can also run stand-alone, but since vision data pre-processing is not the core competence of Sparse Modeling, connecting it to existing vision logic is recommended. The Sparse Modeling tool can be implemented as a standard installation within the customer’s software environment or run in a hypervisor-isolated virtual machine cloud; even FPGA-based implementations on custom carrier boards are possible to reduce the required power envelope further. (Version 1.0 of the AI Sparse Modeling tool from Hacarus is available as part of an instantly deployable starter kit. The kit can run the tool stand-alone or connected to existing vision systems.)

Sparse Modeling is factory-ready, with support for industry standard image acquisition channels such as GigE or USB 3.x. Installation requires 3 simple steps:

- Connect with the installed image acquisition system via the tool’s API;

- Capture images to train the algorithm;

- Tune the algorithm using human inspectors.

No external cloud training is needed. All AI training and inference-system based predictions run within edge computing devices. The tool is easily trained on vision-based intelligence like ‘door is open’ or ‘switches are in wrong position,’ and it is ultimately faster to train and implement than programmed solutions with many lines of “if then else” code.

Sparse Modeling is also suitable for image data analytics and for analyzing time-series data. Because time-series analysis is useful for predictive maintenance, this makes it an interesting IoT and Industry 4.0 edge logic for machine builders as well.

Starter kit with scalable hardware platform

The starter kit has been compiled by Hacarus in cooperation with Japanese semiconductor trading company PALTEK and can be deployed and tested in any GigE and USB 3.x environment. It is designed on the basis of a palm-sized industrial box PC using standard Computer-on-Modules. The system measures 173 x 88 x 21.7 mm (6.81 x 3.46 x 0.85 in.). It is slim and offers excellent performance thanks to the latest Intel Atom and Celeron processors (code name Apollo Lake), which are available for series production. The system suits low-power, high-performance applications based on x86 processors.

The Sparse Modeling platform integrates congatec Qseven Computer-on-Modules for flexible performance scalability.

The system also has a rich set of I/Os enabling different setups on end users’ factory floors. Standard interfaces are 2 x GbE ready for GigE Vision, 1 x USB 3.0/2.0, 4 x USB 2.0 and 1 x UART (RS-232). Extensions are possible with 2 x Mini-PCIe with USIM socket, 1 x mSATA socket and 16-bit programmable GPIO. The DC input voltage range is 9 V to 32 V.

Based on the Qseven Computer-on-Modules from congatec, the system offers flexibility in CPU selection and upgradeability on the basis of Intel’s CPU generations. One of the key benefits of the Computer-on-Module standard specified by the SGET standardization body for embedded computing technologies is that it supports both ARM and x86 platforms. This makes the low-power form factor module future proof as it is also suitable for new Sparse Modeling setups in line with customers’ evolving applications.

congatec

www.congatec.com/en

Hacarus Inc.

hacarus.com
