Innovations in sensing technology that won’t break the bank promise to help robotically piloted vehicles understand their surroundings.

Leland Teschler | Executive Editor

Lidar units used for digital mapping produce a characteristic 3D display defined by laser pulses reflecting from objects in the field of view. This display was generated by a Velodyne LiDAR unit having a 360° field of view.

Look at one of the prototype autonomous vehicles cruising the highways and you may see a spinning cylinder perched atop the roof. This cylinder houses a light detection and ranging (lidar) sensor. Initially, lidar sensors were used to generate digital maps for navigation software. Now they are a critical part of the plan for how future generations of autonomous vehicles will sense what’s happening around them to prevent collisions.

Lidar units basically bounce a laser beam off a target and use the return time to measure distance. This time-of-flight (TOF) measurement can resolve the dimensions of objects as far as 300 m away to within a few centimeters, and the units can provide this information in milliseconds.
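The arithmetic behind a TOF measurement is short enough to sketch. The snippet below is an illustration only, not code from any lidar vendor; the function names are made up for this example. It converts a round-trip time to a distance and shows what timing precision the 300-m and few-centimeter figures above imply.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the target; the pulse covers the path twice (out and back)."""
    return C * round_trip_s / 2.0


def round_trip_time_s(distance_m: float) -> float:
    """Time a pulse needs to reach a target at distance_m and return."""
    return 2.0 * distance_m / C


# A target 300 m away returns its echo after roughly 2 microseconds.
t_300m = round_trip_time_s(300.0)

# Resolving 3 cm means timing the echo to roughly 0.2 nanoseconds.
t_3cm = round_trip_time_s(0.03)

print(f"300 m round trip: {t_300m * 1e6:.3f} us")
print(f"3 cm of range corresponds to {t_3cm * 1e9:.3f} ns of timing")
```

The second figure is the demanding one: centimeter-level resolution calls for sub-nanosecond timing in the receiver electronics.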

One problem: Lidar units have historically been expensive. For example, consider the lidar units that sat atop entries in the 2005 Darpa Grand Challenge, the event credited with launching autonomous vehicle technology. Developed by industry pioneer Velodyne LiDAR, they cost in the $10,000 range, largely because they used a precision rotating platform to move their laser beams and detectors across the surroundings.

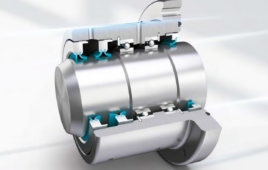

Industry pioneer Velodyne LiDAR developed the first lidar units for the Darpa autonomous vehicle Grand Challenge. The 360° field of view that characterized the early lidar units is still available in modern units such as Velodyne’s Puck LITE. As in the Grand Challenge lidar, the Puck LITE rotates both its lasers and detectors. The rotation rate of the head is programmable and effectively sets the frame rate of the resulting video. A lower rotation rate gives a higher effective horizontal resolution but a lower frame rate, and vice versa. A rotation sensor allows the end user to manage this trade-off. The head can rotate at rates from 5 to 20 Hz.
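The trade-off between rotation rate and horizontal resolution follows from simple arithmetic: if the lasers fire at a fixed rate, a faster-spinning head sweeps through more degrees between firings. The sketch below assumes a fixed firing rate of 18,000 firings per second. That figure is an illustrative assumption, not a specification taken from this article.

```python
def azimuth_step_deg(rotation_hz: float, firings_per_s: float) -> float:
    """Degrees the head turns between consecutive laser firings."""
    return 360.0 * rotation_hz / firings_per_s


# Assumed, illustrative firing rate (not a figure from the article).
FIRINGS_PER_S = 18_000.0

# One rotation is one frame, so the rotation rate is also the frame rate.
for rotation_hz in (5.0, 10.0, 20.0):
    step = azimuth_step_deg(rotation_hz, FIRINGS_PER_S)
    print(f"{rotation_hz:4.0f} frames/sec -> {step:.2f} deg between firings")
```

Under this assumption, quadrupling the rotation rate from 5 to 20 Hz makes the horizontal sampling four times coarser.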

Clearly, this kind of price point won’t work for mass-produced autonomous vehicles. So today, manufacturers are devising lidar units that are more economical than those guiding the Darpa Challenge cars. Lidar designs targeting mass-produced autonomous vehicles have been announced costing a few hundred dollars or less.

One way manufacturers are reducing lidar cost is by eliminating the need for a precision spinning platform as found in Darpa Challenge lidars. The spinning optical platform approach has the advantage that it can create a 360° field of view (FOV). Lidars employing spinning platforms are still in use but now tend to mainly show up in aerial mapping or in inspecting vegetation as carried out by quadcopter drones.

But many lidar units now on the drawing boards for automotive use don’t employ a 360° FOV. Instead, they look out in one direction with an FOV of perhaps 100° horizontally. To get a 360° coverage area, autonomous cars will likely carry three or four of these limited-view lidar units.

There are several ways of realizing limited FOV lidar. One technique, sometimes dubbed flash lidar, captures an entire scene with a single laser pulse. It generally uses a 2D array of pixels, analogous to that in ordinary digital cameras but with the additional ability to record depth and intensity. Each pixel records the time the laser pulse takes to bounce back to the sensor, so each pixel captures the depth, location, and intensity of the reflection it sees. A high-speed processor calculates the physical range of the objects in front of the camera. In lidar parlance, the resulting information is called a 3D point cloud frame, and it is generated at video rates, generally up to 60 frames/sec.
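To make the idea of a point cloud frame concrete, here is a minimal sketch of the per-pixel calculation. It assumes each pixel looks along an evenly spaced angle within the FOV and that pixels with no echo report `None`. Both are simplifying assumptions for illustration, and the function name is hypothetical.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def point_cloud_frame(times_s, intensities, h_fov_deg, v_fov_deg):
    """Turn per-pixel round-trip times into (x, y, z, intensity) points.

    x points forward, y to the side, z up. Pixels holding None are skipped.
    """
    rows, cols = len(times_s), len(times_s[0])
    points = []
    for r in range(rows):
        # Elevation of the row center; row 0 is the top of the image.
        elevation = math.radians((0.5 - (r + 0.5) / rows) * v_fov_deg)
        for c in range(cols):
            t = times_s[r][c]
            if t is None:
                continue
            # Azimuth of the column center; column 0 is the left edge.
            azimuth = math.radians(((c + 0.5) / cols - 0.5) * h_fov_deg)
            rng = C * t / 2.0
            x = rng * math.cos(elevation) * math.cos(azimuth)
            y = rng * math.cos(elevation) * math.sin(azimuth)
            z = rng * math.sin(elevation)
            points.append((x, y, z, intensities[r][c]))
    return points


# Tiny 2x2 "sensor": three pixels see something 10 m away, one sees nothing.
t10 = 2.0 * 10.0 / C
frame = point_cloud_frame(
    [[t10, t10], [t10, None]],
    [[0.9, 0.4], [0.7, 0.0]],
    h_fov_deg=100.0,
    v_fov_deg=20.0,
)
for x, y, z, i in frame:
    print(f"x={x:6.2f} y={y:6.2f} z={z:6.2f} intensity={i:.1f}")
```

A real sensor would also correct for lens distortion and timing offsets, which this sketch leaves out.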

Some lidar units use micromirrors to scan their laser across targets. An example of a device implementing this technique is one from LeddarTech. Its scanning-type lidar unit covers a FOV of 60°×20° with a resolution of 0.25°×0.3° and can detect pedestrians more than 200 m away. In operation, a laser diode pulses a collimated beam toward the micromirror, which oscillates at a high frequency on a single axis. The laser diode synchronizes its pulses with the micromirror in a way that results in multiple scans of the horizontal FOV when the micromirror redirects the beam toward a diffuser lens. The lens doubles the angle of orientation of the beam and diffuses the laser pulse vertically. Targets in the FOV reflect back light that gets captured by a receiver lens and redirected to an array of photodiodes. The array segments each vertical signal into multiple individual measurements to build a 3D matrix covering the entire FOV. The resulting information is digitized and sent to an image processor.
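The FOV and resolution figures quoted for this unit translate directly into a measurement grid. The sketch below works out how many cells the 60°×20° FOV holds at 0.25°×0.3° resolution and how large one cell is at 200 m. The function names are made up for this example, and the flat-target geometry is a simplification.

```python
import math


def scan_grid(h_fov_deg, v_fov_deg, h_res_deg, v_res_deg):
    """Whole measurement cells that fit in the FOV (columns, rows)."""
    # The small epsilon guards against floating-point results like 239.9999.
    cols = int(h_fov_deg / h_res_deg + 1e-9)
    rows = int(v_fov_deg / v_res_deg + 1e-9)
    return cols, rows


def cell_size_m(res_deg, distance_m):
    """Width (or height) that one angular cell spans at a given distance."""
    return distance_m * math.tan(math.radians(res_deg))


cols, rows = scan_grid(60.0, 20.0, 0.25, 0.3)
cell_w = cell_size_m(0.25, 200.0)
cell_h = cell_size_m(0.3, 200.0)

print(f"grid: {cols} x {rows} = {cols * rows} cells per frame")
print(f"one cell at 200 m: {cell_w:.2f} m wide, {cell_h:.2f} m tall")
```

At 200 m each cell is roughly the size of a person's torso, which gives a feel for why pedestrian detection at that range rests on only a handful of returns.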

Another technique for generating limited FOV lidar uses a MEMS micromirror to steer a laser beam across a scene in the style of a raster pattern. An example of such a system is that devised by LeddarTech. It uses a pulsed laser diode whose beam bounces off a MEMS micromirror that oscillates rapidly on a single axis. The micromirror sends the beam to a diffuser lens, which doubles the angle of orientation of the beam and diffuses the laser pulse so it hits targets across the vertical FOV. A photodiode array detects the backscattered light coming from the targets. Meanwhile, the laser diode pulses are synchronized with the movement of the micromirror in a way that scans the horizontal FOV in multiple lines, raster style. The detector array segments each vertical signal into multiple individual measurements to build a 3D matrix representing the targets in the FOV.

GM buys a lidar maker

Unfortunately, the time-of-flight measurements implemented by traditional lidar units have their share of issues. For example, simple TOF measurements are prone to interference from other signal sources, and the interference worsens with distance because of the weaker signals involved. And the useful range of TOF lidars depends on how well they detect the relatively faint reflected signals. Making the lidar photodetectors more sensitive also makes them more susceptible to interfering signals.

The Oewaves patent describes the operation of its whispering gallery mode optical resonator, likely to be incorporated in the Strobe lidar system, using this arrangement. A source laser beam couples into a whispering gallery mode resonator using an optical coupler such as a prism through a phase rotator and a lens. A subset of the frequencies represented in the laser beam propagates through the resonator as a self-reinforcing wave that is “captured” in the whispering gallery mode. A portion of this propagated light couples out of the resonator by a second optical coupler. The output light beam gets reflected by a mirror to provide a reflected light beam. The reflected light beam couples back into the resonator to form a counterpropagating wave. This wave couples out of the resonator via the first optical coupler and returns to the source laser as feedback light, which narrows the linewidth of the laser output. The narrowed linewidth output of the source laser can be output through an exposed facet of the first prism and used in a lidar system.

Difficulties inherent in ordinary lidar may be one reason General Motors recently acquired Strobe Inc., a small California startup developing a sub-$100 solid-state lidar for self-driving cars. Strobe’s approach to lidar differs from that of other manufacturers. It produces brief chirps of frequency-modulated (FM) laser light in the style of chirped radar, where the frequency within each chirp varies linearly. Detectors measure the phase and frequency of the echoing chirp. This gives information not only about the distance of targets but also their relative velocity. Moreover, the returns are said to be less susceptible to interference (because interfering signals are generally not modulated) and can be detected with photodetectors that needn’t be super sensitive.
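A textbook way to see how a linear chirp yields both distance and velocity is the triangular (up-then-down) chirp used in FM radar. The sketch below assumes that scheme; the article does not say Strobe uses it. The 15-GHz bandwidth is the figure cited from the patent later in this article, while the 10-µs chirp duration and 1,550-nm wavelength are assumptions chosen for illustration.

```python
C = 299_792_458.0  # speed of light, m/s


def beat_frequencies(range_m, closing_speed_mps, bandwidth_hz, chirp_s, wavelength_m):
    """Beat frequencies (up-chirp, down-chirp) for a target that is closing in.

    Assumes the range term dominates, so both beat frequencies stay positive.
    """
    slope = bandwidth_hz / chirp_s                       # chirp rate, Hz per second
    f_range = 2.0 * range_m * slope / C                  # shift due to round-trip delay
    f_doppler = 2.0 * closing_speed_mps / wavelength_m   # shift due to motion
    return f_range - f_doppler, f_range + f_doppler


def range_and_speed(f_up_hz, f_down_hz, bandwidth_hz, chirp_s, wavelength_m):
    """Invert the two beat frequencies back to range and closing speed."""
    slope = bandwidth_hz / chirp_s
    range_m = C * (f_up_hz + f_down_hz) / (4.0 * slope)
    speed_mps = wavelength_m * (f_down_hz - f_up_hz) / 4.0
    return range_m, speed_mps


BANDWIDTH_HZ = 15e9      # chirp bandwidth cited from the patent
CHIRP_S = 10e-6          # assumed chirp duration
WAVELENGTH_M = 1550e-9   # assumed laser wavelength

f_up, f_down = beat_frequencies(100.0, 20.0, BANDWIDTH_HZ, CHIRP_S, WAVELENGTH_M)
est_range, est_speed = range_and_speed(f_up, f_down, BANDWIDTH_HZ, CHIRP_S, WAVELENGTH_M)
print(f"range {est_range:.2f} m, closing speed {est_speed:.2f} m/s")
```

The sum of the two beat frequencies isolates range and their difference isolates Doppler shift, which is why a single chirp pair can report both quantities.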

The idea of FM chirp-based lidar isn’t new, but its performance has been limited by factors that include the linewidth of the emitting laser, the range of frequencies within the chirp, the linearity of the frequency change during each chirp, and the reproducibility of individual chirps. Improving one of these factors tends to make the others worse. And FM lidar systems developed to date generally have relied on relatively large laser sources and on a carefully modulated, low-noise local oscillator with the FM provided by a relatively large interferometer. All in all, these setups have been complicated and bulky.

The Strobe lidar gets around the bulkiness problem by using a technique devised by another company, Oewaves, Inc., which was founded by one of Strobe’s principals. Called a “whispering gallery mode” optical resonator (that is, a resonating optical cavity), it reduces the laser’s linewidth via light feedback. The “whispering gallery” refers to a type of wave that can travel around a concave surface. Though the idea originated with sound waves in cathedrals, it can apply to light waves circulating with little attenuation inside tiny glass spheres or toruses.

Here is how the Oewaves patent describes a lidar system built around its FM chirp scheme for an advanced driver assistance system (ADAS). An FM laser couples to an optical resonator which can support one or more whispering gallery propagation modes. Light couples out of the optical resonator to provide optical injection locking of the FM laser and substantially reduce its linewidth. To FM the laser, the optical properties of the optical resonator are changed (for example by heat via a resistance heater, pressure via a piezoelectric device, and/or the application of a voltage potential via an electrode), to alter the wavelength associated with a whispering gallery mode. The resulting light frequency is fed back to the laser via injection locking to alter the laser light output frequency. A chirp generator is employed to produce optical frequency chirps from the FM laser. An optical switch directs the transmitted optical frequency chirp to an optical scanner. A scan clock controls the scanning rate and/or chirp generation rate and can make adjustments depending on environmental and/or traffic conditions. Chirps reflected from targets are directed by the scanner to a photocell/amplifier. Amplified electrical signals corresponding to the reflected and retained chirp are processed in a fast Fourier transform engine and go to a data processing engine that estimates both the distance between the lidar emitter and the reflective object and their relative velocity.
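The role of the Fourier transform stage can be illustrated with a toy simulation. The sketch below assumes a single stationary target, so that mixing the reflected chirp with the retained one leaves a single beat tone whose frequency is proportional to range. It uses a plain discrete Fourier transform in place of a hardware FFT engine, and every parameter value is an assumption picked for illustration.

```python
import cmath
import math

C = 299_792_458.0  # speed of light, m/s


def beat_signal(range_m, slope_hz_per_s, sample_rate_hz, n_samples):
    """Sampled beat tone for one stationary target (idealized, noise-free)."""
    f_beat = 2.0 * range_m * slope_hz_per_s / C
    return [math.cos(2.0 * math.pi * f_beat * n / sample_rate_hz)
            for n in range(n_samples)]


def peak_frequency_hz(samples, sample_rate_hz):
    """Frequency of the strongest DFT bin below the Nyquist limit."""
    n = len(samples)
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2):
        acc = sum(s * cmath.exp(-2j * math.pi * k * i / n)
                  for i, s in enumerate(samples))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    return best_bin * sample_rate_hz / n


def estimate_range_m(samples, sample_rate_hz, slope_hz_per_s):
    """Convert the detected beat frequency back to a distance."""
    return C * peak_frequency_hz(samples, sample_rate_hz) / (2.0 * slope_hz_per_s)


SLOPE = 1.5e9 / 10e-6   # assumed chirp: 1.5 GHz swept in 10 microseconds
SAMPLE_RATE = 128e6     # assumed digitizer rate
N_SAMPLES = 512         # assumed record length

samples = beat_signal(30.0, SLOPE, SAMPLE_RATE, N_SAMPLES)
estimate = estimate_range_m(samples, SAMPLE_RATE, SLOPE)
print(f"estimated range: {estimate:.2f} m")
```

With these numbers each frequency bin spans about 0.25 m of range, so the estimate lands within one bin of the true 30 m. A moving target would add a Doppler offset that this toy model ignores.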

You can get a general sense of how the Strobe lidar works by reviewing the Oewaves patent. As the patent describes, light from the laser couples into the whispering gallery mode optical resonator and then couples back out as a returning counterpropagating wave whose frequency equals the optical resonator’s standing-wave frequency. This returning wave gets injected into the laser and has the effect of locking it to the resonator frequency. It also reduces variations in the amplitude of the laser light (relative intensity noise, or RIN), which can degrade FM lidar performance.

So far so good. What’s noteworthy about the technique is that it seems to be a way of modulating the optical properties of the whispering gallery mode optical resonator. Frequency modulation of the resonator optical properties is what provides a method for producing highly linear and reproducible optical chirps for the lidar system.

As is often the case with patent applications, several of the technical details in Oewaves’ lidar scheme are only vaguely described. For example, regarding the FM technique, it only says that a transducer (via electrodes, resistive heater, and/or piezoelectric device) can alter an optical property (for example, the refractive index) of the whispering gallery mode optical resonator. In any event, Oewaves says all these components, even the spherical or torus-shaped resonator, can reside on a single substrate.

The patent also says the linewidth of the optical-injection-locked laser can be less than 100 Hz in some cases. This is important because the narrow linewidth helps maintain light frequency reproducibility from one chirp to the next. Such a laser source can provide linear chirps with large bandwidths of 15 GHz or more, which can make for lidar able to resolve distances down to less than a centimeter in some cases.
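The link between chirp bandwidth and centimeter-level resolution comes from the standard range-resolution relation for chirped systems, ΔR = c / (2B). The short sketch below applies it to the 15-GHz figure; the function name is made up for this example.

```python
C = 299_792_458.0  # speed of light, m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """Smallest range difference a chirp of this bandwidth can separate."""
    return C / (2.0 * bandwidth_hz)


res_15ghz = range_resolution_m(15e9)
print(f"15 GHz chirp -> {res_15ghz * 100:.3f} cm range resolution")
```

A 15-GHz chirp works out to just under 1 cm, so wider chirps are what push resolution below a centimeter.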

Different lidar units employ different kinds of light detectors and detection techniques depending on the ranges involved, as summarized by light detector supplier Hamamatsu. Besides making time-of-flight measurements, lidar optimized for short-range use (a few tens of meters) may implement a triangulation scheme that measures the position of the reflected light relative to the light source. Also used are indirect methods that measure the phase difference between the laser and the returning light. Detectors for short-range lidar units tend to be silicon PIN photodiodes. As detection range rises, the intensity of the laser light backscattered from targets falls off. Thus, medium-range lidar tends to use avalanche photodiode detectors because they can provide signal gains in the 10 to 100 range. Lidar units ranging out to hundreds of meters may use silicon photomultiplier detectors. These are basically avalanche photodiodes operating in what’s called Geiger mode: A single photon causes avalanche current through a p-n junction that is reverse-biased well above the breakdown voltage. The signal gain for such devices can be on the order of 10⁶.
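The indirect, phase-based method mentioned in this caption can be sketched numerically. The example assumes the common arrangement in which the laser's intensity is modulated at a fixed frequency and the receiver measures the phase lag of that modulation. The 10-MHz modulation frequency is an illustrative assumption, not a figure from Hamamatsu or this article.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def phase_range_m(phase_rad: float, mod_freq_hz: float) -> float:
    """Range from the phase lag of the modulation: d = c * phi / (4 * pi * f)."""
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range_m(mod_freq_hz: float) -> float:
    """Phase wraps at 2*pi, so ranges beyond c / (2 * f) alias to nearer ones."""
    return C / (2.0 * mod_freq_hz)


MOD_FREQ_HZ = 10e6  # assumed modulation frequency

quarter_cycle_range = phase_range_m(math.pi / 2.0, MOD_FREQ_HZ)
max_range = unambiguous_range_m(MOD_FREQ_HZ)

print(f"90 deg phase lag -> {quarter_cycle_range:.2f} m")
print(f"unambiguous range at 10 MHz -> {max_range:.2f} m")
```

The limited unambiguous range is consistent with the caption's point that such methods suit short-range units.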

It looks as though we won’t have long to wait to see lidar with these capabilities. Strobe has said it expects to produce its first commercial product next spring.

Hello!

The paragraph quoted in your article is faulty. The spinning approach to Lidar used today in cars, used for Google cars for 8 years, used by Caterpillar 8 years, will continue far into the future. It is the Lidar of choice for nearly all test fleets for autonomous driving today. The “low cost” TOF flash and other Lidar mentioned in your article have issues to solve before they will SAFELY be used for autonomy. They must be seamlessly stitched together, with errors and distortion. What is the cost of its processing? What is its accuracy with the stitching issues? What is the cost of computation and do you need a computer the size of your car trunk to process it? How expensive is the processing for this? Has it been tested for accuracy? Has it been tested for range? Has even one been on the road to drive a car autonomously?

Compared to spinning Lidar, which is shipping to customers, these unproven concepts are a hope and a dream to make money. The spinning Lidar is patented, otherwise makers of these cheap Lidar would spin. The “cheap” Lidar may prove to be very expensive and not nearly as good. Also, spinning Lidar is coming down in price to meet the demand for not just Autonomy but Advanced Safety, ADAS solutions. If you investigate the subject, you will see spinning Lidar showing up in all Autonomous cars. Other Lidar solutions for driving are far from proved out. They may be suitable for Parking Assist.

“One way manufacturers are reducing lidar cost is by eliminating the need for a precision spinning platform as found in Darpa Challenge lidars. The spinning optical platform approach has the advantage that it can create a 360° field of view (FOV). Lidars employing spinning platforms are still in use but now tend to mainly show up in aerial mapping or in inspecting vegetation as carried out by quadcopter drones.”