Avionic and aerospace system designers are always seeking greater computational processing capabilities coupled with smaller size, less weight and lower power consumption (SWaP). In recent years, they’ve transitioned their systems from discrete processor devices or clusters of processors to multicore system-on-a-chip (SoC) devices. The SoCs have delivered the performance needed, but they’ve brought with them a layer of complexity and a new set of challenges.

Determinism

The safety of people usually depends upon the reliability and dependability of avionic systems. As a result, these systems must consistently perform as expected. The execution of every task in a system, for example, must be predictable and repeatable every time it is run. In other words, the operations of the system must be deterministic. One common measure of determinism is time: each task must complete within a defined window of time. In a multicore SoC, however, contention for shared resources can, if left uncontrolled, produce unexpected and unpredictable execution times.
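As a rough illustration of treating time as the measure of determinism, the sketch below checks a set of execution-time samples against a timing window and reports the jitter. The `TimingWindow` type, the `timing_report` helper and all of the numbers are hypothetical and chosen only for illustration; they are not measurements from any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimingWindow:
    """Hypothetical timing requirement for one task, in microseconds."""
    earliest_us: float
    deadline_us: float


def timing_report(samples_us, window):
    """Summarize measured execution times against a timing window."""
    best = min(samples_us)
    worst = max(samples_us)
    return {
        "best_us": best,
        "worst_us": worst,
        "jitter_us": worst - best,  # spread between fastest and slowest run
        "within_window": window.earliest_us <= best and worst <= window.deadline_us,
    }


# Illustrative samples only: the same task run alone and under contention.
window = TimingWindow(earliest_us=400.0, deadline_us=500.0)
isolated = [410.0, 412.0, 411.0, 413.0]
contended = [410.0, 455.0, 412.0, 530.0]

print(timing_report(isolated, window))
print(timing_report(contended, window))
```

In this made-up data the average of the contended runs is still below the deadline, yet the worst case is not, which is why the check uses the worst observed time rather than the mean.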

Previous generations of avionic architectures could segregate the processes or tasks that make up the system. A certain processor device with its own dedicated memory might be charged with communications to the outside world, for example. Segmentation this robustly delineated is more difficult with a multicore SoC, but the sharing of resources that is inherent in SoC-based systems is quite advantageous with respect to SWaP. Dedicated resources often sit idle, yet they still drive up cost, size, weight and power consumption. Today’s SoC-based architectures take advantage of shared resources and virtualization strategies to minimize SWaP and maximize system performance. One challenge with regard to virtualization is the complexity it adds to the system and the effect this has on determinism.

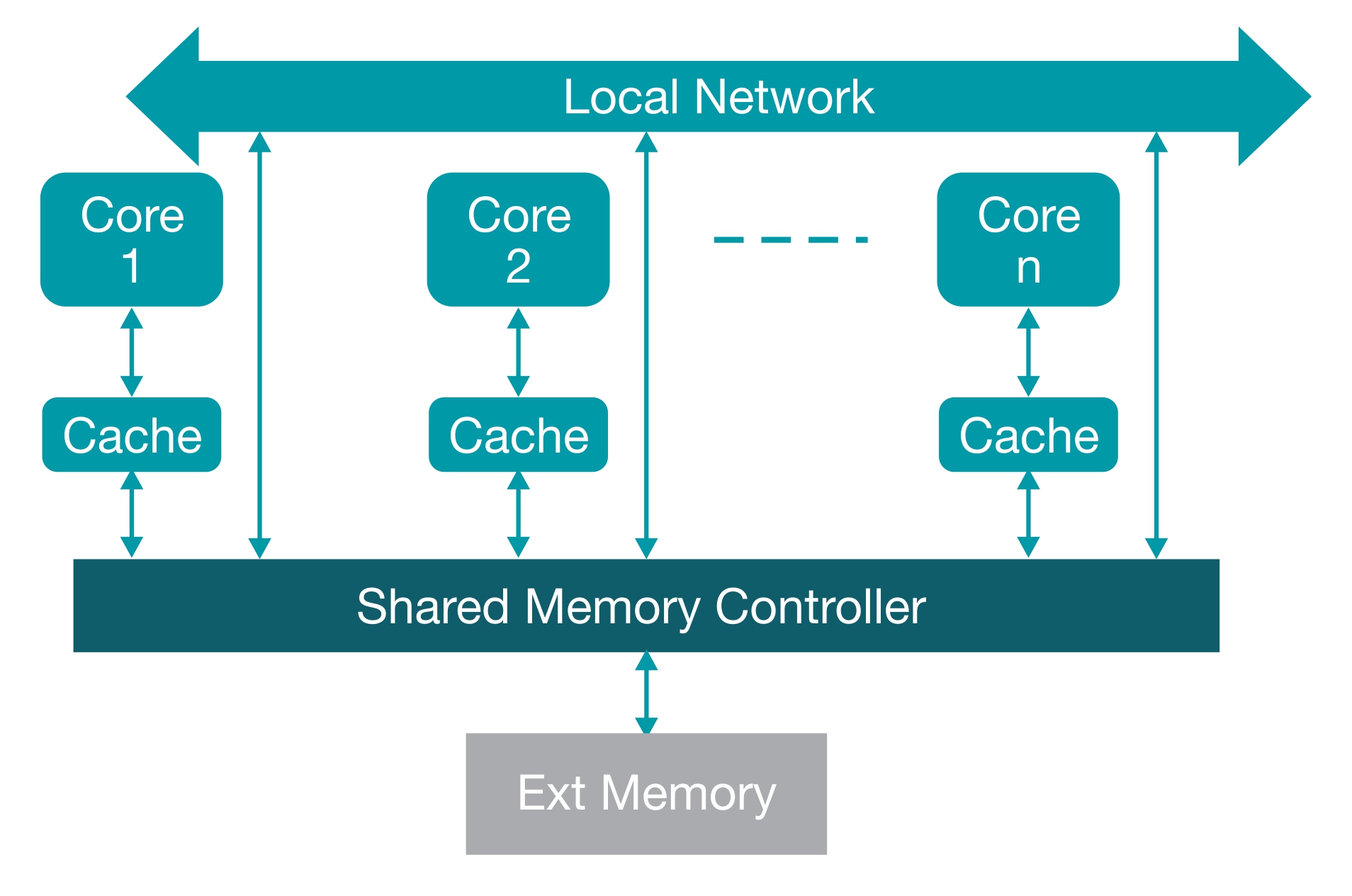

Another factor which takes its toll on determinism is the increasing size and complexity of the software running on avionic systems. As hardware elements have been integrated into one SoC, software elements have become inextricably intertwined in large multithreaded code bases. In many cases, the large software footprint in today’s avionics systems will require external memory resources, usually in the form of DDR memory. This then raises the issue of what sort of cache memory architecture and methodology will be adopted so that the system will meet its performance and throughput requirements.

Figure 1: The various levels of a cache architecture.

Cache memory is neither new nor unstudied, but its presence does affect the system’s determinism. The overly simplistic solution would be to lock all critical data and code into cache or to place it in high-performance on-chip SRAM. This can maintain the system’s average throughput, but disruptive or unexpected events will still occur, and they can slow the tasks that are less than mission critical and make their execution unpredictable. In addition, when multiple tasks are running on multiple cores, each task may be accessing shared DDR memory and caching critical data locally. In such cases, each task must be clearly separated from the others so that no task’s execution affects the execution of any other. Cache memory also raises the issue of data coherence. When data shared between cores, or between a core and a peripheral or coprocessor, is kept coherent in hardware, software has less need, and in some cases no need, to perform manual cache flushes or use barrier mechanisms to keep that data coherent. This benefits both determinism and performance in general.
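The effect of separating tasks in the cache can be sketched with a toy model. The example below assumes a small, fully associative cache with least-recently-used replacement, which is a simplification and not a description of any particular SoC. The `LRUCache` class, the `critical_misses` helper and the working-set sizes are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Toy fully associative LRU cache; capacity is counted in cache lines."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()

    def access(self, address):
        """Return True on a hit; on a miss, fill the line and return False."""
        if address in self.lines:
            self.lines.move_to_end(address)  # mark as most recently used
            return True
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)   # evict the least recently used line
        self.lines[address] = True
        return False


def critical_misses(critical_cache, other_cache, rounds=5):
    """Count the critical task's misses while a second task streams data."""
    misses = 0
    for r in range(rounds):
        for addr in range(4):                # critical task reuses the same 4 lines
            if not critical_cache.access(("crit", addr)):
                misses += 1
        for addr in range(8):                # other task touches 8 new lines per round
            other_cache.access(("other", r * 8 + addr))
    return misses


shared = LRUCache(8)                         # both tasks compete for one cache
shared_misses = critical_misses(shared, shared)
partitioned_misses = critical_misses(LRUCache(4), LRUCache(4))  # each task has its own share

print(shared_misses, partitioned_misses)     # 20 4
```

With the shared cache, the streaming task evicts the critical task's lines every round, so the critical task misses on every access. With a dedicated partition, it misses only while warming up, and its behavior no longer depends on what the other task does.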

Software footprint and cache behavior already demand attention, and they deserve even closer focus once the complexities of today’s SoC integration are introduced. Virtualization can manage some of those complexities, but it also creates additional considerations for determinism.

Virtualizing the execution of tasks across multiple processor cores often involves shared memory spaces, which are also virtualized. For example, it might appear to two tasks running on two different cores that they are communicating through the same memory space, when, in reality, two different physical locations in memory have been allocated, one to each task. The virtualization of memory space is handled by one or more memory management units (MMUs), which translate addresses using memory address translation tables. Each task might be assigned a translation lookaside buffer (TLB), which caches entries from its memory address translation table. As long as the local TLBs are properly maintained, the tasks will operate with determinism, but if TLB entries are unexpectedly evicted or overwritten for whatever reason, the translations must be reconstituted through a table walk. When this happens, the determinism in the system with regard to these tasks may be lost.
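A minimal sketch of why a table walk disturbs timing is shown below. It models a TLB as a small cache in front of a page table. The `TLB` class, the first-in, first-out replacement policy and the cycle costs (1 cycle for a hit, 30 for a walk) are assumptions made for illustration, not figures for any real MMU.

```python
class TLB:
    """Toy TLB caching virtual-page to physical-frame translations."""

    def __init__(self, entries, page_table, hit_cycles=1, walk_cycles=30):
        self.entries = entries
        self.page_table = page_table
        self.hit_cycles = hit_cycles      # assumed cost of a TLB hit
        self.walk_cycles = walk_cycles    # assumed cost of a table walk
        self.cached = {}

    def translate(self, vpage):
        """Return (physical_frame, cycles_spent) for a virtual page."""
        if vpage in self.cached:
            return self.cached[vpage], self.hit_cycles
        frame = self.page_table[vpage]    # stands in for the table walk
        if len(self.cached) >= self.entries:
            oldest = next(iter(self.cached))
            del self.cached[oldest]       # simple first-in, first-out eviction
        self.cached[vpage] = frame
        return frame, self.walk_cycles

    def flush(self):
        """Model an unexpected loss of all cached translations."""
        self.cached.clear()


page_table = {0: 100, 1: 101}
tlb = TLB(entries=2, page_table=page_table)

warmup = [tlb.translate(p)[1] for p in (0, 1)]
steady = [tlb.translate(p)[1] for p in (0, 1, 0, 1)]
tlb.flush()
after_flush = [tlb.translate(p)[1] for p in (0, 1, 0, 1)]

print(warmup, steady, after_flush)
```

Once warmed up, every translation costs the same single cycle. After the flush, the same access pattern pays the walk cost again on the first touch of each page, so identical code takes a different amount of time.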

With multiple cores plus peripherals and coprocessors, some of the data accesses to a common resource, such as DDR or a large on-chip shared memory, will be of a real-time, critical nature, while others will be lower priority or need only good average performance. If the system can define priority and quality of service for these accesses, the highest-priority real-time accesses can be given the advantage during times of congestion. This results in greater determinism.
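The benefit of prioritized access can be sketched with a toy arbiter that serves one request per cycle. The `serve` function, the request tuples and the priority values are hypothetical, and a real interconnect's quality-of-service scheme would be considerably more involved.

```python
import heapq


def serve(requests, use_priority):
    """Serve one request per cycle and return {name: cycles_waited}.

    requests: list of (arrival_cycle, priority, name); a lower priority
    value means a more urgent request.
    """
    pending = sorted(requests)            # order by arrival cycle
    queue, waits = [], {}
    cycle = index = seq = 0
    while index < len(pending) or queue:
        # Admit every request that has arrived by the current cycle.
        while index < len(pending) and pending[index][0] <= cycle:
            arrival, priority, name = pending[index]
            rank = priority if use_priority else 0   # rank 0 for all gives plain FIFO
            heapq.heappush(queue, (rank, seq, arrival, name))
            seq += 1
            index += 1
        if queue:
            _, _, arrival, name = heapq.heappop(queue)
            waits[name] = cycle - arrival
        cycle += 1
    return waits


# Six bulk transfers arrive first; one critical access arrives a cycle later.
requests = [(0, 5, f"bulk{i}") for i in range(6)] + [(1, 0, "critical")]

fifo_wait = serve(requests, use_priority=False)["critical"]
qos_wait = serve(requests, use_priority=True)["critical"]
print(fifo_wait, qos_wait)                # 5 0
```

Under first-come, first-served arbitration, the critical access waits behind whatever bulk traffic happens to be queued, so its latency depends on the other tasks. With priority arbitration in this model, it is served in the cycle it arrives.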

Interference elimination

Another issue avionic system designers must address is eliminating or limiting processing interference among tasks on multiple cores. Although distinct from determinism, interference can also affect the degree of determinism in the system. Whereas determinism is typically defined in terms of the system’s performance, interference affects the integrity of data in use in the system.

An example of interference would be two tasks executing concurrently, on different cores or on the same core, with both accessing the same memory space. One task might be critical to the safety of the human operator while the second is a routine housekeeping task. In such a case, the housekeeping task must not be able to alter the contents of shared memory if doing so would impede the execution of the mission-critical task. In these situations, the MMU or memory protection unit (MPU) associated with a given core can be programmed to restrict access to memory addresses while a certain configuration of the system is in effect. But even this solution is fraught with complexity.
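The kind of check an MPU performs can be sketched in software as a lookup against a table of protected regions. The `Region` type, the `access_allowed` helper, the task names and the addresses below are all hypothetical. A real MPU enforces this in hardware and is configured through device-specific registers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """Hypothetical protected memory region with per-task permissions."""
    base: int
    size: int
    readers: frozenset
    writers: frozenset


def access_allowed(regions, task, address, write):
    """Return True if the task may read (or write) the given address."""
    for region in regions:
        if region.base <= address < region.base + region.size:
            allowed = region.writers if write else region.readers
            return task in allowed
    return False  # default deny: addresses outside every region are blocked


regions = [
    Region(
        base=0x8000_0000,
        size=0x1000,
        readers=frozenset({"flight_control", "housekeeping"}),
        writers=frozenset({"flight_control"}),
    ),
]

print(access_allowed(regions, "housekeeping", 0x8000_0010, write=False))  # True
print(access_allowed(regions, "housekeeping", 0x8000_0010, write=True))   # False
```

Here the housekeeping task can observe the shared region but cannot alter it, which matches the requirement that a low-criticality task must not disturb data the critical task depends on.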

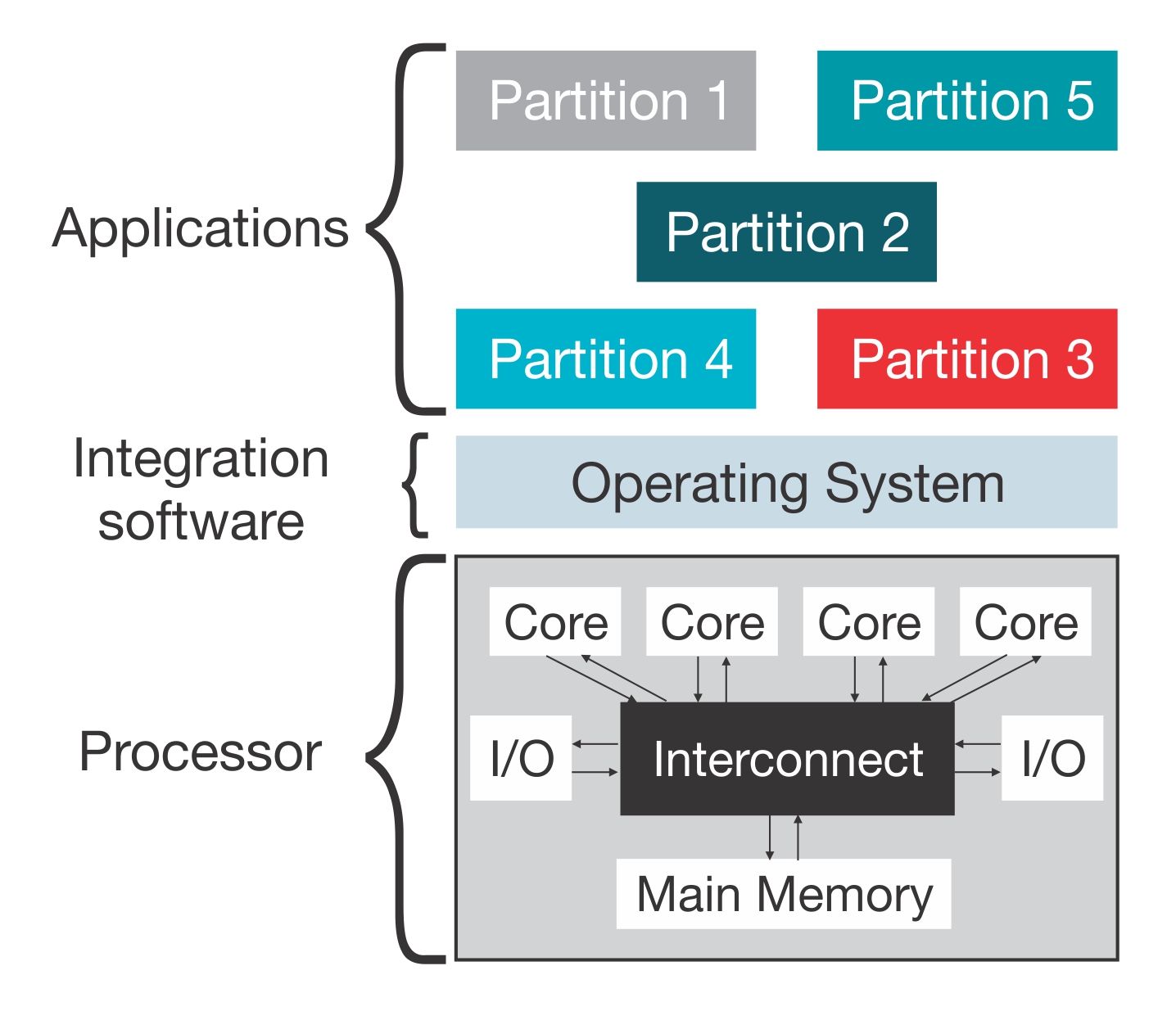

Many SoCs in avionic systems are heterogeneous, meaning they contain a variety of processing core types, each with its own instruction set architecture (ISA). During system operations, the integrity of any one core could depend on the integrity of all of the other cores or of one or more sets of cores. Often, the MMU configuration for a core, or for a set of cores with the same ISA and a common hypervisor, is handled at the hypervisor level, where the configuration of all MMUs can be maintained and coordinated so that tasks will not interfere with each other. This becomes a challenge in devices with heterogeneous cores, where a common hypervisor level may not be feasible.

Again, a key element with regard to eliminating interference is the robust partitioning of tasks in time and space. This can be accomplished through a number of means, such as firewalling off certain resources, establishing master/slave relationships among cores and tasks, or generally restricting the use of key resources. For example, one task on a certain core might be designated as the master of access to an I/O interface such as the system’s PCI Express (PCIe) port. All traffic over PCIe would be processed through this master task, which would also be responsible for managing the configuration of the interface.
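One common way to partition tasks in time is a fixed cyclic schedule, in which each partition owns a window inside a repeating major frame. The sketch below is a simplified model of that idea. The frame length, window sizes, partition names and both helper functions are hypothetical.

```python
MAJOR_FRAME_MS = 100

# (start_ms, duration_ms, partition): hypothetical windows in one major frame.
SCHEDULE = [
    (0, 40, "flight_control"),
    (40, 20, "comms"),
    (60, 40, "housekeeping"),
]


def schedule_is_valid(schedule, major_frame_ms):
    """Check that windows do not overlap and fit inside the major frame."""
    end = 0
    for start, duration, _ in sorted(schedule):
        if duration <= 0 or start < end:
            return False
        end = start + duration
    return end <= major_frame_ms


def active_partition(schedule, major_frame_ms, t_ms):
    """Return the partition that owns the processor at time t_ms, or None."""
    offset = t_ms % major_frame_ms        # position inside the repeating frame
    for start, duration, name in schedule:
        if start <= offset < start + duration:
            return name
    return None                           # an idle gap in the schedule


print(schedule_is_valid(SCHEDULE, MAJOR_FRAME_MS))
print(active_partition(SCHEDULE, MAJOR_FRAME_MS, 145))
```

Because the owner of the processor at any instant depends only on the time and the static table, a partition that overruns or misbehaves cannot take time away from another partition's window.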

Figure 2: Software framework.

Environmental performance

The effects of the environment are not a new challenge for deterministic operation. However, with increased integration at the SoC level, more components are packed into a smaller area, and no discussion of determinism would be complete without considering these effects. Environmental performance, meaning how the avionics system performs when subjected to the extreme temperatures of high altitude and to radiation events, is a critical consideration for the system’s reliability and survivability. The junction temperature of all semiconductor devices, including SoCs, must of course be evaluated. In addition, the effects that various failure conditions, such as single event upsets (SEU), single event latchup (SEL), single event gate rupture (SEGR) and single event burnout (SEB), have on the system’s determinism should be investigated and mitigated accordingly. For example, a radiation event might alter a bit in memory or flip the state of a logic element, but safeguards such as error correction code (ECC) or double ECC can be built into the memory subsystem so that unexpected processing results are avoided and the system’s determinism is preserved.
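To show how ECC can absorb a single flipped bit, the sketch below uses a Hamming(7,4) code, a textbook code that corrects any one-bit error in a 7-bit codeword. This is a teaching-sized stand-in: memory subsystems typically protect much wider words and often add double-error detection, and the function names here are hypothetical.

```python
def hamming74_encode(data):
    """Encode 4 data bits as the codeword [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4      # parity over codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4      # parity over codeword positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4      # parity over codeword positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword):
    """Return (data_bits, error_position); position 0 means no error seen."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s3   # syndrome gives the 1-based error position
    if position:
        c[position - 1] ^= 1          # correct the single flipped bit
    return [c[2], c[4], c[5], c[6]], position


word = [1, 0, 1, 1]
stored = hamming74_encode(word)

upset = list(stored)
upset[4] ^= 1                         # model a single event upset in bit 5

recovered, position = hamming74_decode(upset)
print(recovered == word, position)    # True 5
```

The task reading this word sees the original data despite the upset, so its result does not change. A code of this kind corrects only one flipped bit per codeword, which is one reason stronger schemes are used where multi-bit upsets are a concern.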

Conclusions

Even though SoCs offer avionic and aerospace system designers a bounty of processing capabilities while minimizing SWaP, they do present challenges that legacy system architectures could overcome by segregating functional elements and other resources on discrete semiconductor devices. SoCs don’t afford such a luxury because they rely on shared resources and multitasking processing cores. Among the chief concerns for developers who are designing SoC-based avionic systems are the system’s determinism, the elimination of interference, which can affect data integrity, and system performance and reliability in extreme environmental conditions. All of these issues can be effectively addressed in SoC-based systems through various means, including robust space and time partitioning, system-wide coordination techniques such as hypervisor oversight, and extra safeguards designed in to overcome unforeseen events.
