Edited by: Natasha Townsend, Associate Editor

Determine the input and output signals your application requires, then use them to choose a DAQ device that can measure or generate those signals.

With many data acquisition (DAQ) devices to choose from, it can be difficult to select the right one for a specific application. It is therefore important to understand the different types of signals a device must measure or generate, and their attributes. Measurements typically rely on sensors (or transducers), which convert a physical phenomenon into a measurable electrical signal, such as a voltage or current. Conversely, sending an electrical signal to a transducer, such as an actuator, can produce a physical phenomenon. For this reason, let the signals your application needs guide which DAQ device you consider.

Understanding the functions a DAQ device can perform helps narrow down the choices for an application before you commit to one. DAQ devices provide: 1) analog inputs to measure analog signals; 2) analog outputs to generate analog signals; 3) digital inputs/outputs to measure and generate digital signals; and 4) counter/timers to count digital events or generate digital pulses/signals.

Some devices are dedicated to just one of these functions, while multifunction devices support them all. Multifunction DAQ devices have a fixed channel count but offer a combination of analog inputs/outputs, digital inputs/outputs, and counters. Because they support different types of I/O, they can address applications that a single-function DAQ device cannot. Single-function devices with a fixed number of analog input/output, digital input/output, or counter channels are also easy to find. In either case, consider a device with more channels than you currently need, in case the channel count must grow. A device with only the capabilities the current application requires will be difficult to adapt to new applications in the future.

Other options are available depending on the application. A modular platform lets you customize the system to your exact requirements: the chassis controls timing and synchronization across a variety of I/O modules. One advantage of a modular system is the choice of modules with distinct purposes, which makes more configurations possible; with this option, you can seek modules that perform a given function more accurately than a multifunction device. Another advantage is the ability to choose the number of slots in the chassis. Although each chassis has a fixed number of slots, you can select one with more slots than you currently need to leave room for future expansion.

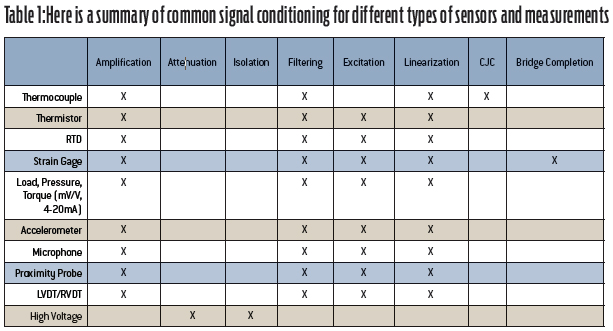

Table 1 summarizes common signal conditioning for different types of sensors and measurements. Find your sensor type in Table 1, then provide the signal conditioning it needs, either by adding external signal conditioning or by choosing a DAQ device with built-in signal conditioning. Many devices also include built-in connectivity for specific sensors, which simplifies sensor integration.

Another aspect to consider is how fast the device must acquire or generate samples. One of the most important specifications of a DAQ device is the sampling rate, the speed at which the device's analog-to-digital converter (ADC) takes samples of a signal. Typical sampling rates, whether hardware- or software-timed, reach up to 2 MS/s. The sampling rate an application needs depends on the maximum frequency component of the signal being measured or generated.

The Nyquist theorem states that a signal can be accurately reconstructed if it is sampled at more than twice its highest frequency component of interest. However, to represent the shape of the signal, you may want to sample at least 10 times faster than its maximum frequency. Choosing a DAQ device with a sample rate of at least 10 times the signal frequency gives a more accurate measured or generated representation of the signal.
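The two rules of thumb above reduce to simple arithmetic. The Python sketch below is illustrative only: the function names are hypothetical, and the 10x factor is this article's rule of thumb rather than a hard limit.

```python
def nyquist_bound(max_freq_hz: float) -> float:
    """Nyquist bound: the sampling rate must exceed twice the highest frequency."""
    return 2.0 * max_freq_hz


def recommended_rate(max_freq_hz: float, oversample: float = 10.0) -> float:
    """Rule of thumb: sample about 10x the highest frequency to capture shape."""
    return oversample * max_freq_hz


def device_rate_ok(device_max_rate_sps: float, max_freq_hz: float) -> bool:
    """True if a device's maximum sampling rate meets the 10x rule of thumb."""
    return device_max_rate_sps >= recommended_rate(max_freq_hz)


print(nyquist_bound(1_000))               # bound to exceed for a 1 kHz signal
print(recommended_rate(1_000))            # 10x recommendation for a 1 kHz signal
print(device_rate_ok(2_000_000, 1_000))   # a 2 MS/s device checked against it
```

Keeping the oversampling factor as a parameter makes it easy to tighten or relax the rule for a particular application.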

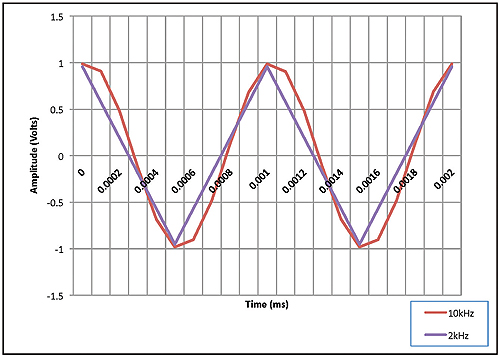

To illustrate, suppose the application measures a 1 kHz sine wave. According to the Nyquist theorem, the signal must be sampled faster than 2 kHz. However, sampling at 10 kHz is recommended to measure or generate a more accurate representation of the signal. Figure 1 compares a 1 kHz sine wave sampled at 2 kHz and at 10 kHz.
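The sketch below reproduces the comparison in Figure 1 numerically, assuming a sine wave with zero phase offset (an arbitrary choice for illustration). At exactly 2 kHz every sample lands on a zero crossing, so the samples carry no trace of the wave, which is why the Nyquist rate must be strictly exceeded. At 10 kHz, ten samples per cycle trace out the wave's shape.

```python
import math

SIGNAL_HZ = 1_000.0  # the 1 kHz sine wave from the example


def sample_sine(freq_hz: float, rate_sps: float, n_samples: int,
                phase: float = 0.0) -> list[float]:
    """Return n_samples of sin(2*pi*f*t + phase) taken at t = n / rate."""
    return [math.sin(2 * math.pi * freq_hz * n / rate_sps + phase)
            for n in range(n_samples)]


# Exactly 2 kHz with zero phase: every sample falls on a zero crossing.
at_2k = sample_sine(SIGNAL_HZ, 2_000.0, 8)
# 10 kHz: ten samples per cycle follow the rise and fall of the wave.
at_10k = sample_sine(SIGNAL_HZ, 10_000.0, 10)

print([round(v, 3) for v in at_2k])
print([round(v, 3) for v in at_10k])
```

With a different phase the 2 kHz samples would alternate between two values instead of sitting at zero, but they still could not show the shape of the sine wave.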

Figure 1. 10 kHz Versus 2 kHz Representation of a 1 kHz Sine Wave

Once the maximum frequency component of the signal is known, you can choose a DAQ device with a sampling rate appropriate for measuring or generating it.

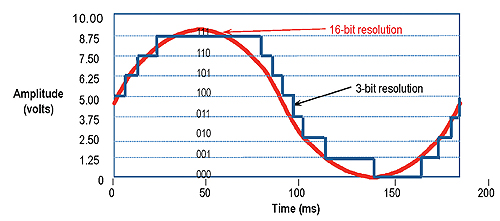

The smallest change in the signal that must be detected determines the resolution required of the DAQ device. Resolution refers to the number of binary levels an ADC uses to represent a signal. To illustrate, imagine how a sine wave would be represented if it were passed through ADCs with different resolutions. Figure 2 compares a 3-bit ADC and a 16-bit ADC. A 3-bit ADC can represent eight (2^3) discrete voltage levels, while a 16-bit ADC can represent 65,536 (2^16). With 3-bit resolution, the sine wave looks more like a step function than a sine wave, whereas the 16-bit ADC produces a clean-looking sine wave.
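To see the effect in Figure 2 without a plot, the Python sketch below passes one cycle of a sine wave through an idealized ADC model. The model (equal-width steps across a -1 V to +1 V range, output at the step midpoint) is a simplifying assumption; real converters also add noise, offset, and nonlinearity.

```python
import math


def quantize(value: float, bits: int, v_min: float = -1.0,
             v_max: float = 1.0) -> float:
    """Idealized ADC: split [v_min, v_max] into 2**bits equal steps and return
    the midpoint of the step that value falls in (out-of-range values clamp)."""
    levels = 2 ** bits
    step = (v_max - v_min) / levels
    code = math.floor((value - v_min) / step)
    code = max(0, min(levels - 1, code))  # valid output codes are 0 .. levels-1
    return v_min + (code + 0.5) * step


# One cycle of a unit-amplitude sine wave, 1,000 points.
wave = [math.sin(2 * math.pi * n / 1000) for n in range(1000)]

results = {}
for bits in (3, 16):
    quantized = [quantize(v, bits) for v in wave]
    worst = max(abs(q - v) for q, v in zip(quantized, wave))
    results[bits] = (len(set(quantized)), worst)
    print(f"{bits:2d}-bit: {len(set(quantized))} distinct levels, "
          f"worst error {worst:.6f} V")
```

In this model the 3-bit converter uses only eight output values and can be off by half a 0.25 V step (0.125 V), while the 16-bit converter's worst error is about 15 μV.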

Figure 2. 16-Bit Resolution Versus 3-Bit Resolution Chart of a Sine Wave

Typical DAQ devices have voltage ranges of +/-5 V or +/-10 V. To take advantage of the full resolution, the representable voltage levels are distributed evenly across the selected range. For instance, a DAQ device with a +/-10 V range and 12 bits of resolution (2^12, or 4,096 evenly distributed levels) can detect a change of about 5 mV, whereas a device with 16 bits of resolution (2^16, or 65,536 evenly distributed levels) can detect a change of about 300 μV. Devices with 12, 16, or 18 bits of resolution meet many application requirements. However, applications that measure sensors with both small and large voltage ranges are likely to benefit from the wider dynamic range of 24-bit devices. The voltage range and resolution an application requires are primary factors in selecting the right device.
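The smallest detectable change quoted above is the input span divided by the number of levels. The short sketch below checks the figures: for the +/-10 V range it gives about 4.88 mV at 12 bits and about 305 μV at 16 bits, which the text rounds to 5 mV and 300 μV. The helper name is hypothetical.

```python
def code_width(range_min_v: float, range_max_v: float, bits: int) -> float:
    """Smallest detectable change: the input span divided by 2**bits levels."""
    return (range_max_v - range_min_v) / 2 ** bits


for bits in (12, 16, 18, 24):
    width = code_width(-10.0, 10.0, bits)
    print(f"{bits}-bit on +/-10 V: {width * 1e6:.2f} uV per level")
```

The same helper shows why a narrower range helps: halving the span to +/-5 V halves the code width at any resolution.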

Accuracy is a measure of an instrument's ability to faithfully indicate the value of a measured signal. It is distinct from resolution; however, accuracy can never be better than the instrument's resolution. How accuracy is specified depends on the type of measurement device. An ideal instrument always measures the true value with 100 percent certainty, but real instruments report a value with an uncertainty specified by the manufacturer. That uncertainty can depend on many factors, such as system noise, gain error, offset error, and nonlinearity. A common manufacturer specification for uncertainty is absolute accuracy, which gives the worst-case error of a DAQ device on a specific range. An example calculation of absolute accuracy for a National Instruments multifunction device is given below:

$$\text{Absolute Accuracy} = (\text{Reading} \times \text{Gain Error}) + (\text{Voltage Range} \times \text{Offset Error}) + \text{Noise Uncertainty}$$

$$\text{Absolute Accuracy} = 2.2\ \text{mV}$$
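The formula can be evaluated directly. In the sketch below, the spec values are placeholders chosen for easy arithmetic, not figures from any National Instruments datasheet, so the result (1.25 mV) does not reproduce the 2.2 mV example. Gain and offset errors are passed as fractions; they are often quoted in parts per million (ppm), which must first be multiplied by 1e-6. The voltage range is assumed to be the positive full-scale value (10 for a +/-10 V range).

```python
def absolute_accuracy(reading_v: float, gain_error: float, range_v: float,
                      offset_error: float, noise_uncertainty_v: float) -> float:
    """Worst-case error: reading*gain error + range*offset error + noise.

    gain_error and offset_error are fractions (e.g. 100 ppm -> 100e-6);
    noise_uncertainty_v is already in volts.
    """
    return reading_v * gain_error + range_v * offset_error + noise_uncertainty_v


# Placeholder spec values for illustration only; not taken from any datasheet.
acc = absolute_accuracy(reading_v=10.0, gain_error=100e-6,
                        range_v=10.0, offset_error=20e-6,
                        noise_uncertainty_v=50e-6)
print(f"Absolute accuracy: {acc * 1e3:.2f} mV")
```

Because the gain term scales with the reading, the worst case on a given range usually occurs at full scale, which is why the reading here is set equal to the range.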

Note that an instrument's accuracy depends not only on the instrument but also on the type of signal being measured. If the signal is noisy, the measurement's accuracy suffers. DAQ devices span a wide range of accuracies and price points. Some devices provide self-calibration, isolation, and other circuitry to improve accuracy. Whereas a basic DAQ device may have an absolute accuracy worse than 100 mV, a higher-performance device with such features may have an absolute accuracy of around 1 mV. Once you understand your accuracy requirements, you can choose a DAQ device whose absolute accuracy meets the application's needs.
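Putting the three criteria together, a list of candidate devices can be screened mechanically. The sketch below is hypothetical throughout: the DaqSpec fields, device names, and numbers are placeholders rather than real products, the rate check uses this article's 10x rule, and resolution is taken as the full input span divided by 2^bits.

```python
from dataclasses import dataclass


@dataclass
class DaqSpec:
    """Hypothetical summary of a candidate device's datasheet values."""
    name: str
    max_rate_sps: float
    bits: int
    range_v: float              # symmetric input range, e.g. 10.0 for +/-10 V
    absolute_accuracy_v: float


def meets_requirements(dev: DaqSpec, max_signal_hz: float,
                       smallest_change_v: float,
                       required_accuracy_v: float) -> bool:
    """Apply the three checks discussed above: rate, resolution, accuracy."""
    rate_ok = dev.max_rate_sps >= 10 * max_signal_hz
    code_width_v = 2 * dev.range_v / 2 ** dev.bits  # full span / levels
    resolution_ok = code_width_v <= smallest_change_v
    accuracy_ok = dev.absolute_accuracy_v <= required_accuracy_v
    return rate_ok and resolution_ok and accuracy_ok


# Hypothetical candidates; names and numbers are placeholders, not real products.
candidates = [
    DaqSpec("basic-12bit", 48_000, 12, 10.0, 0.100),
    DaqSpec("mid-16bit", 250_000, 16, 10.0, 0.002),
]
suitable = [d.name for d in candidates
            if meets_requirements(d, max_signal_hz=1_000,
                                  smallest_change_v=0.001,
                                  required_accuracy_v=0.005)]
print(suitable)
```

Here the 12-bit placeholder fails on resolution (about 4.9 mV per level against a 1 mV requirement), even though its sampling rate is adequate.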


National Instruments

www.ni.com

