Choosing the appropriate motor has never been simple, because no single factor takes precedence. New energy-efficiency standards now add another dimension to the selection process, one that equipment designers must address immediately.

As part of its effort to move toward greater energy independence and security, Congress passed the Energy Independence and Security Act of 2007 (EISA). It aims to increase production of renewable energy; increase efficiency of products, buildings and vehicles; and promote research and deployment of greenhouse gas capture and storage. Although EISA is wide-ranging in scope, only one page focuses on motor efficiency, yet that page will significantly complicate motor design and selection for machinery designers. Motors manufactured after December 19, 2010 must comply with the new rules defined in EISA, and the time to begin the redesign process is now.

The law

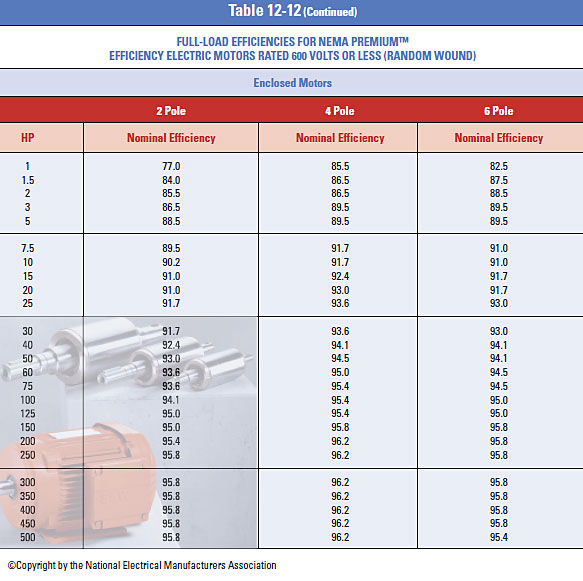

First, the legislation calls for a significant jump in motor efficiency and, for the first time, requires OEMs to comply. For instance, a 5.0 hp, 4-pole TEFC induction motor was expected to have a minimum efficiency of 87.5% under the old legislation and must reach 89.5% under the new one. The old minimum energy efficiency standards applied mainly to three-phase general-purpose induction motors, and the efficiency levels they set were known as EPAct levels. The new regulation reclassifies these motors as Subtype I. Whether manufactured alone or as part of another piece of equipment, they will be required to have nominal full-load efficiencies that meet the levels defined in NEMA MG-1 (2006) Table 12-12, which is also known as NEMA Premium® efficiency.
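To put the two-point jump in perspective, the following is a minimal Python sketch of the energy saved by the 5 hp example motor. The efficiencies come from the text; the 4,000 operating hours per year, the full-load assumption, and the function names are illustrative assumptions, not figures from the legislation.

```python
HP_TO_KW = 0.7457  # one mechanical horsepower expressed in kilowatts


def input_power_kw(output_hp: float, efficiency: float) -> float:
    """Electrical input power needed to deliver output_hp at the shaft."""
    return output_hp * HP_TO_KW / efficiency


def annual_savings_kwh(output_hp: float, eff_old: float, eff_new: float,
                       hours_per_year: float) -> float:
    """Energy saved per year at full load when efficiency rises from eff_old to eff_new."""
    delta_kw = input_power_kw(output_hp, eff_old) - input_power_kw(output_hp, eff_new)
    return delta_kw * hours_per_year


# 5 hp motor, 87.5% (EPAct) versus 89.5% (NEMA Premium).
# 4000 h/year is an assumed duty, chosen only for illustration.
saved = annual_savings_kwh(5.0, 0.875, 0.895, 4000)
print(f"{saved:.0f} kWh saved per year")
```

Under these assumptions the saving is roughly 380 kWh per year for one motor; the result scales linearly with operating hours and with the number of motors installed.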

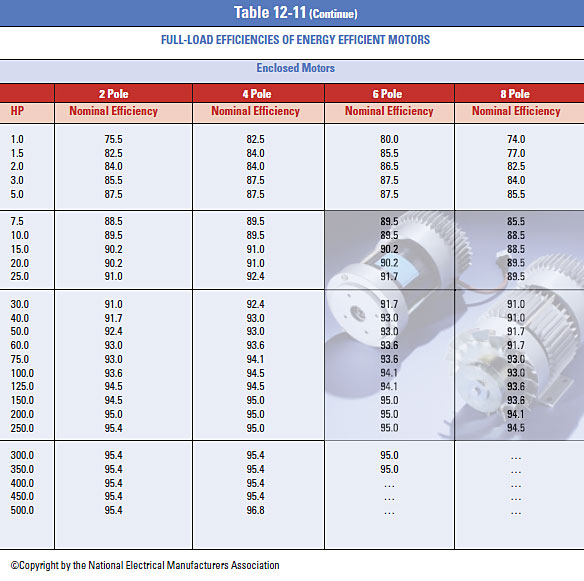

The EISA law also created a completely new category of motors, regulating efficiency for designs that were not covered under previous legislation. These motors are called Subtype II and are defined as motors that incorporate design elements of general purpose motors (Subtype I), but are configured as U-frame motors, Design C motors, close-coupled pump motors, footless motors, vertical solid shaft normal thrust motors that are tested in a horizontal configuration, eight-pole motors (900 rpm), and polyphase motors with less than 600 volts. Subtype II motors between 1 and 200 hp, NEMA Design B motors with ratings above 200 hp (but not greater than 500 hp), and fire-pump motors are required to have nominal full-load efficiencies as defined in NEMA MG-1 (2006) Table 12-11, also recognized as EPAct efficiency levels.
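The mapping from motor category to required efficiency table, as summarized in this article, can be sketched as a small lookup. This is a simplified reading of the article's summary, not of the legal text; the function name and arguments are hypothetical, and the 1 to 200 hp range for Subtype I is an assumption that the article does not state.

```python
from typing import Optional

TABLE_PREMIUM = "NEMA MG-1 (2006) Table 12-12"  # NEMA Premium levels
TABLE_EPACT = "NEMA MG-1 (2006) Table 12-11"    # EPAct levels


def required_table(hp: float,
                   subtype: Optional[str] = None,
                   nema_design: Optional[str] = None,
                   fire_pump: bool = False) -> Optional[str]:
    """Return the efficiency table a motor must meet, or None if not covered here."""
    if fire_pump:
        return TABLE_EPACT
    if subtype == "I" and 1 <= hp <= 200:    # hp range for Subtype I is assumed
        return TABLE_PREMIUM
    if subtype == "II" and 1 <= hp <= 200:
        return TABLE_EPACT
    if nema_design == "B" and 200 < hp <= 500:
        return TABLE_EPACT
    return None


print(required_table(5, subtype="I"))
print(required_table(50, subtype="II"))
print(required_table(300, nema_design="B"))
```

A real compliance check would need the full definitions and exemptions from the statute and the DOE rules; the sketch only mirrors the categories named above.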

In addition to these new laws for polyphase motors, small motors (including single-phase) are going through a public rule-making process at the U.S. Department of Energy (DOE) that is expected to establish minimum efficiency standards for the first time.

Changing priorities

Rising energy costs and the new legislation are now moving efficiency to the top of the checklist. The factors traditionally believed most important in an OEM designer’s evaluation of a motor are price, size, noise level, reliability and weight. A recent survey of motor manufacturers showed that their customers ranked availability, reliability, and price as the top three concerns. Efficiency has been important, but it has often been considered only after all the other parameters that affect the overall machine’s design, until now.

Some manufacturers are now promoting induction motors with cast copper rotors because they exceed the NEMA Premium® efficiency levels, which prompts experts to speculate about the possibility of a super-premium efficiency category. Although recent tests conducted by Advanced Energy substantiated these claims, motors with cast copper rotors are currently available only in ratings of 20 hp or less. This suggests that any newly legislated level should remain at the levels in Table 12-12 (NEMA Premium®). With today’s technology and rising material costs, efficiency levels above NEMA Premium® are impractical for induction motors.

Efficiency regulations currently apply only to induction motors, but other motor types, such as permanent magnet (PM) motors, tend to have higher efficiencies. Because the excitation comes from the magnets, one set of windings can be omitted, so losses can be lower in PM motors than in induction motors.

Currently, single-phase motors have no minimum energy efficiency standards, but the DOE is closely monitoring this category for possible new legislation, and the standards for testing may also change. The general expectation is that any revised test standard would become the definitive basis for evaluating the efficiency of single-phase motors once a rule is established.

Motor drive techniques

So what’s an OEM to do? What options does it have to meet these new standards in a cost-effective, timely manner? Sometimes switching to a different category of motor, such as from a shaded-pole motor to a permanent split capacitor motor, will suffice. These changes may also involve a change in control scheme.

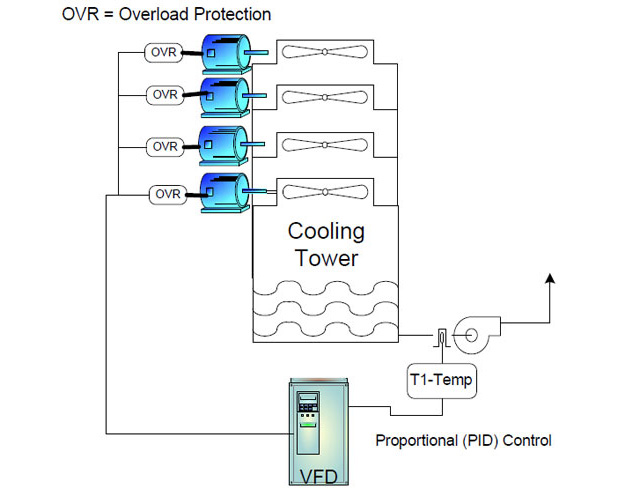

For instance, single-phase induction machines (specifically, permanent split-capacitor motors) and universal motors, widely used in industrial washers, are managed with simple voltage-control techniques. Contrast this with high-end, high-performance machines, where three-phase motors are more common. Here, variable-frequency drive (VFD) control schemes can be found.

Switched-reluctance motors (SRMs) are not yet an appropriate alternative because their control schemes are still evolving, but three-phase motors are readily available and may be a smart choice because their VFD control techniques have improved significantly. More important, the cost of VFD electronics has been dropping. The result: three-phase motors are increasingly attractive for low-cost models.

In the same way, an OEM using a universal motor with simple triac control may now find that a three-phase motor with VFD control will provide better energy efficiency, while OEMs using three-phase/VFD configurations may make the move to technologies like brushless dc. In short, to meet the requirements of the legislation, OEMs should be prepared to try a number of motor designs and control schemes, evaluating various options to find the one that makes sense in terms of both design and cost. The same considerations that have always played a role in evaluating motors still apply, but with the added efficiency requirements now in play. It’s not simply a matter of comparing apples to oranges, but rather of choosing the most cost-effective and efficient motor. All these factors need to be weighed against the expected life of the motor and the application the motor is driving.
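One way to weigh purchase price against efficiency over the expected life of the motor is a simple lifetime-cost comparison. The Python sketch below is only an illustration: every price, efficiency, duty, and electricity rate in it is a hypothetical placeholder rather than measured data, and it ignores discounting, maintenance, and part-load behavior.

```python
from dataclasses import dataclass

HP_TO_KW = 0.7457  # one mechanical horsepower expressed in kilowatts


@dataclass
class MotorOption:
    name: str
    purchase_cost: float  # motor plus control electronics, dollars (hypothetical)
    efficiency: float     # combined motor and drive efficiency, 0..1 (hypothetical)


def lifetime_cost(option: MotorOption, output_hp: float, hours_per_year: float,
                  years: float, price_per_kwh: float) -> float:
    """Purchase cost plus undiscounted energy cost over the motor's expected life."""
    energy_kwh = output_hp * HP_TO_KW / option.efficiency * hours_per_year * years
    return option.purchase_cost + energy_kwh * price_per_kwh


# Placeholder candidates; real figures would come from vendor quotes and lab tests.
options = [
    MotorOption("universal motor + triac", 60.0, 0.60),
    MotorOption("three-phase + VFD", 140.0, 0.80),
    MotorOption("brushless dc", 190.0, 0.85),
]

# Assumed application: 0.5 hp load, 1000 h/year, 10-year life, $0.10 per kWh.
best = min(options, key=lambda o: lifetime_cost(o, 0.5, 1000, 10, 0.10))
for o in options:
    print(f"{o.name}: ${lifetime_cost(o, 0.5, 1000, 10, 0.10):.2f}")
print("lowest lifetime cost:", best.name)
```

With these placeholder numbers the mid-priced option comes out ahead, which illustrates why the most efficient motor is not automatically the most cost-effective one; different duty cycles or energy prices can change the ranking.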

This is even more unsettling for some OEMs, because although they know their own equipment, they may not necessarily be motor experts. For these companies, the motor evaluation process should include a motor-build and inspection analysis (MBIA) before any actual testing. This tear-down analysis of a sample motor verifies that its build quality meets minimum standards for that type of motor and the application in question. If it doesn’t meet the minimum standards, there is usually no benefit in proceeding further with that particular motor. In the few cases where there is an overriding reason for the OEM to work with the motor vendor, a testing lab can recommend design and manufacturing modifications to improve the motor.

After the tear-down analysis, sample motors undergo performance characterization under load on high-precision dynamometers to validate nameplate specifications and the torque requirements of the intended application. When this step is successful, the final step is an endurance test to gauge motor behavior under a rigorous application duty cycle. Here, internal motor defects become evident, including some that may not be readily visible during the tear-down analysis or the short performance tests. The endurance tests are designed with the application and the warranty period in mind.
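The core arithmetic behind a dynamometer efficiency check is shaft power (torque times angular speed) divided by electrical input power. The following Python sketch shows that calculation; the sample readings, the function names, and the one-point tolerance are hypothetical and do not represent any test standard's acceptance criteria.

```python
import math


def shaft_power_w(torque_nm: float, speed_rpm: float) -> float:
    """Mechanical output power in watts from torque (N·m) and speed (rpm)."""
    return torque_nm * speed_rpm * 2 * math.pi / 60


def measured_efficiency(torque_nm: float, speed_rpm: float,
                        input_power_w: float) -> float:
    """Ratio of mechanical output power to electrical input power."""
    return shaft_power_w(torque_nm, speed_rpm) / input_power_w


def meets_nameplate(measured: float, nameplate: float,
                    tolerance: float = 0.01) -> bool:
    """True if the measured value is within an assumed tolerance below nameplate."""
    return measured >= nameplate - tolerance


# Hypothetical dynamometer reading for a motor with an 89.5% nameplate efficiency.
eff = measured_efficiency(torque_nm=20.3, speed_rpm=1750, input_power_w=4170)
print(f"measured efficiency: {eff:.3f}")
print("within tolerance:", meets_nameplate(eff, 0.895))
```

In practice a lab would take readings at several load points and follow the applicable test procedure; the sketch covers a single operating point only.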

But is this learning curve practical for an OEM who has worked with a motor that provided good energy efficiency and superior operational performance prior to the new standards? The answer: it does not matter. No matter how well the old motor worked, the legislative requirements must be met. So many OEMs will have to undertake the challenge of moving from a reliable, satisfactory motor to one that is unfamiliar, and face the risk of making it work without encountering warranty issues.

The lowdown

OEMs may face a challenge in applying compliant, energy-efficient motors, especially in applications that often require manual adjustments anyway. Also, energy-efficient motors could have design specifications that differ from those of standard motors, but they will still need to handle similar applications without sacrificing performance, reliability, or safety.

These issues will require a thorough review of current equipment design with an eye toward what can be kept and what must change to meet the new motor specifications. During the process, many OEMs may find vendors proactively competing for their business, which in the end may result in business improvements not previously considered.

Definition of common motor types

Induction motors include single- and three-phase versions. Their stators hold copper wires distributed around their circumference in lap or concentric windings while the rotors are cast aluminum in a squirrel-cage configuration. Single-phase induction motors are less efficient than three-phase models and typically are used in equipment that does not use high power or torque.

Direct-current (dc) machines were once widely used because they provide high starting torque and are relatively simple to control. Today, improvements in power electronics have simplified the control of alternating current (ac) motors to the point that ac is becoming more viable as a replacement in traditional dc industrial applications. Converting from dc to ac offers several advantages in capital and operating costs. However, there are still niche applications for dc motors, especially in transportation, such as golf carts, forklifts, and other material handling equipment.

Permanent-magnet motors come in both brushed dc and ac versions. The high cost of magnet material has hampered the wide application of these motors in the past, but now magnetic material costs are dropping and these motors are more attractive in certain applications. Current research focuses on permanent magnet types and their control, particularly in the area of the plug-in hybrid electric vehicle.

Switched-reluctance motors (SRMs) are a relatively new technology that has yet to find widespread use. Electronically controlled, the SRM rivals the induction motor in ruggedness because its rotor has no windings and consists of a stacked-lamination core with salient poles. The downside is noisy operation and a complicated control scheme, but these motors are increasingly applied in heavy off-road equipment and have been proposed for smaller equipment such as washers or automotive systems.

For more information on the progress of the initiative, visit: http://www1.eere.energy.gov/buildings/appliance_standards/commercial/small_electric_motors.html
