Blade Runner 2049 struck a deep chord with science fiction fans. Maybe it’s because there’s so much talk these days of artificial intelligence and automation — some of it doom and gloom, some of it utopian, with every shade of promise and peril in between. Many of us, having witnessed the Internet revolution first hand — and still in awe of the wholesale transformation of commerce, industry, and daily life — find ourselves pondering the shape of the future. How can the current pace of change and expansion be sustainable? Will there be a breaking point? What will it be: cyber (in)security, the death of net neutrality, or intractable bandwidth saturation?

Only one thing is certain: there will never be enough bandwidth. Our collectively insatiable need for streaming video, digital music and gaming, social media connectivity, plus all the cool stuff we haven’t even invented yet, will fill up whatever additional capacity we create. The reality is that there will always be buffering on video: we could run fiber everywhere, and we’d still find a way to fill it up with HD, then 4K, then 8K, and whatever comes next.

Just like we need smart traffic signals and smart cars in smart cities to handle the debilitating and dangerous growth of automobile traffic, we need intelligent apps and networks and management platforms to address the unrelenting surge of global Internet traffic. To keep up, global traffic management has to get smarter, even as capacity keeps growing.

Fortunately, we have Big Data metrics, crowd-sourced telemetry, algorithms, and machine learning to save us from breaking the Internet with our binge watching habits. But, as Isaac Asimov pointed out in his story Runaround, robots must be governed. Otherwise, we end up with a rogue operator like HAL, an overlord like Skynet, or (more realistically) the gibberish intelligence of the experimental Facebook chatbots. In the case of the chatbots, the researchers learned a valuable lesson about the importance of guiding and limiting parameters: they had neglected to specify use of recognizable language, so the independent bots invented their own.

In other words, AI is exciting and brimming over with possibilities, but needs guardrails if it is to maximize returns and minimize risks. We want it to work out all the best ways to improve our world (short of realizing that removing the human race could be the most effective pathway to extending the life expectancy of the rest of Nature).

It’s easy to get carried away by grand futuristic visions when we talk about AI. After all, some of our greatest innovators are actively debating the enormous dangers and possibilities. But let’s come back down to earth and talk about how AI can at least make the Internet work for the betterment of viewers and publishers alike, now and in the future.

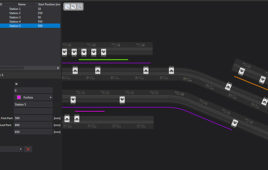

We are already using basic AI to bring more control to the increasingly abstract and complex world of hybrid IT, multi-cloud, and advanced app and content delivery. What we need to focus on now is building better guardrails and establishing meaningful parameters that will reliably get our applications, content, and data where we want them to go without outages, slowdowns, or unexpected costs. Remember, AI doesn’t run in glorious isolation, unerring in the absence of continual course adjustment: this is a common misconception that leads to wasted effort and disappointing or possibly disastrous results. Even Amazon seems to have fallen prey to the set-it-and-forget-it mentality: ask yourself, how often does their shopping algorithm suggest the exact same item you purchased yesterday? Their AI parameters may need periodic adjustment to reliably suggest related or supplementary items instead.

For AI to be practically applied, we have to be sure we understand the intended consequences. This is essential from many perspectives: marketing, operations, finance, compliance, and business strategy. For instance, we almost certainly don’t want automated load balancing to always route traffic for the best user experience possible — that could be prohibitively expensive. Similarly, sometimes we need to route traffic from or through certain geographic regions in order to stay compliant with regulations. And we don’t want to simply send all the traffic to the closest, most available servers when users are already reporting that quality of experience (QoE) there is poor.
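To make the compliance point concrete, here is a minimal sketch of a residency rule applied before any performance-based choice is made. The rule format, the region labels, and the function name `compliant_candidates` are all hypothetical, invented for illustration; a real traffic manager will express such rules in its own way.

```python
def compliant_candidates(user_country, servers, residency_rules):
    """Keep only servers located in regions permitted for this user's country.

    residency_rules maps a user country to the set of regions allowed to
    serve it (a hypothetical format). Countries without an entry are
    treated as unrestricted.
    """
    allowed = residency_rules.get(user_country)
    if allowed is None:
        return list(servers)
    return [s for s in servers if s["region"] in allowed]


# Illustrative data only: two servers and one made-up residency rule.
servers = [
    {"name": "fra-1", "region": "eu"},
    {"name": "iad-1", "region": "us"},
]
residency_rules = {"DE": {"eu"}}

german_options = compliant_candidates("DE", servers, residency_rules)
us_options = compliant_candidates("US", servers, residency_rules)
```

The filter runs first on purpose: cost and experience should only ever be compared among destinations that are allowed in the first place.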

When it comes right down to it, the thing that makes global traffic management work is our ability to program the parameters and rules for decision-making — as it were, to build the guardrails that force the right outcomes. And those rules are entirely reliant upon the data that flows in. To get this right, systems need access to a troika of guardrails: real-time comprehensive metrics for server health, user experience health, and business health.

System Guardrails

Real-time systems health checks are the first element of the guardrail troika for intelligent traffic routing. Accurate, low-latency, geographically dispersed synthetic monitoring answers the essential server availability question reliably and in real time: is the server up and running at all?

Going beyond ‘On/Off’ confidence, we need to know the current health of those available servers. A system that is working fine right now may be approaching resource limits, and a simple On/Off measurement won’t know this. Without knowing the current state of resource usage, a load balancer can send so much traffic to a near-capacity resource that it goes down, potentially setting off a cascading effect that takes down other working resources.

Without scriptable load balancing, you have to dedicate significant time to shifting resources around in the event of DDoS attacks, unexpected surges, launches, repairs, etc. — and problems mount quickly if someone takes down a resource for maintenance but forgets to make the proper notifications and preparations ahead of time. Dynamic global server load balancers (GSLBs) use real-time system health checks to detect potential problems, route around them, and send an alert before failure occurs so that you can address the root cause before it gets messy.
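As a rough illustration of going beyond On/Off, the sketch below weights traffic by remaining headroom and flags servers that are getting close to their limits. It is not any particular GSLB's API: the `ServerHealth` fields, the function names, and the 85% and 75% thresholds are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class ServerHealth:
    name: str
    is_up: bool          # result of the synthetic on/off check
    utilization: float   # fraction of capacity in use, 0.0 to 1.0 (assumed metric)


def routing_weights(servers, max_utilization=0.85):
    """Return traffic weights that favor servers with spare capacity.

    Servers that are down, or at or above max_utilization, get no traffic.
    """
    headroom = {
        s.name: max_utilization - s.utilization
        for s in servers
        if s.is_up and s.utilization < max_utilization
    }
    total = sum(headroom.values())
    if total == 0:
        return {}  # nothing eligible: the caller should alert or fall back
    return {name: h / total for name, h in headroom.items()}


def near_capacity_alerts(servers, warn_utilization=0.75):
    """Names of servers that are up but approaching their limits."""
    return [s.name for s in servers if s.is_up and s.utilization >= warn_utilization]


# Illustrative fleet: C is up but nearly full, D is down.
fleet = [
    ServerHealth("A", True, 0.35),
    ServerHealth("B", True, 0.60),
    ServerHealth("C", True, 0.90),
    ServerHealth("D", False, 0.10),
]
weights = routing_weights(fleet)
alerts = near_capacity_alerts(fleet)
```

With this data, A receives about two thirds of the traffic and B about one third, while C is both excluded from routing and reported in `alerts`, so someone can look into it before it fails.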

Experience Guardrails

The next input to the guardrail troika is Real User Measurements (RUM), which provide information about Internet performance at every step between the client and the clouds, data centers, or CDNs hosting applications and content. Simply put, RUM is the critical measurement of the experience each user is having. As they say, the customer is always right, even when Ops says the server is working just fine. To develop true traffic intelligence, you have to go beyond your own system. This data should be crowd-sourced by collecting metrics from thousands of Autonomous System Numbers, delivering billions of RUM data points each day.

Community-sourced intelligence is necessary to see what’s really happening both at the edges of the network and in the big messy pools of users where your own visibility may be limited (e.g. countries with thousands of ISPs like Brazil, Russia, Canada, and Australia). Granular, timely, real user experience data is particularly important at a time when there are so many individual peering agreements and technical relationships, all of which could be the source of unpredictable performance and quality.
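One simple way to put RUM data to work is to group measurements by the user's network and by the platform that served them, then compare medians. The sketch below assumes each sample is an `(asn, platform, latency_ms)` tuple, which is a made-up format for illustration; the ASNs come from the range reserved for documentation and the platform names are placeholders.

```python
from collections import defaultdict
from statistics import median


def median_latency_by_network(measurements):
    """Group RUM samples by (asn, platform) and return each group's median latency.

    measurements: iterable of (asn, platform, latency_ms) tuples.
    """
    grouped = defaultdict(list)
    for asn, platform, latency_ms in measurements:
        grouped[(asn, platform)].append(latency_ms)
    return {key: median(values) for key, values in grouped.items()}


def best_platform_for(asn, medians):
    """Pick the platform with the lowest median latency for users on one network."""
    candidates = {p: m for (a, p), m in medians.items() if a == asn}
    if not candidates:
        return None  # no RUM data for this network yet
    return min(candidates, key=candidates.get)


# Illustrative samples only.
samples = [
    (64500, "cdn-a", 80), (64500, "cdn-a", 120), (64500, "cdn-a", 100),
    (64500, "cdn-b", 60), (64500, "cdn-b", 70),
    (64501, "cdn-a", 40),
]
medians = median_latency_by_network(samples)
choice_64500 = best_platform_for(64500, medians)
choice_64501 = best_platform_for(64501, medians)
```

The median is used rather than the mean so that a handful of very slow sessions does not dominate the picture. In practice latency is only one of several experience metrics worth tracking per network.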

Business Guardrails

Together, system and experience data inform intelligent, automated decisions so that traffic is routed to servers that are up and running, demonstrably providing great service to end users, and not in danger of maxing out or failing. As long as everything is up and running and users are happy, we’re at least halfway home.

We’re also at the critical divide where careful planning to avoid unintended consequences comes into play. We absolutely must have the third element of the troika: business guardrails.

After all, we are running businesses. We have to consider more than bandwidth and raw performance: we need to optimize the AI parameters to take care of our bottom line and other obligations as well. If you can’t feed cost and resource usage data into your global load balancer, you won’t get traffic routing decisions that are as good for profit margins as they are for QoE. As happy as your customers may be today, their joy is likely to be short-lived if your business exhausts its capital reserves and resorts to cutting corners.
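A business guardrail can be as simple as "cheapest option that is still good enough." The sketch below assumes a 0 to 100 experience score and a per-gigabyte cost for each platform; both fields, the `Platform` type, and the default floor of 80 are hypothetical values picked for the example rather than anything prescribed here.

```python
from dataclasses import dataclass


@dataclass
class Platform:
    name: str
    qoe_score: float      # 0-100, higher is better (assumed to come from RUM)
    cost_per_gb: float    # assumed to come from billing or contract data
    healthy: bool = True  # assumed to come from system health checks


def choose_platform(platforms, min_qoe=80.0):
    """Pick the cheapest healthy platform that still meets the QoE floor.

    If nothing meets the floor, fall back to the best-performing healthy platform.
    """
    healthy = [p for p in platforms if p.healthy]
    if not healthy:
        return None
    good_enough = [p for p in healthy if p.qoe_score >= min_qoe]
    if good_enough:
        return min(good_enough, key=lambda p: p.cost_per_gb)
    return max(healthy, key=lambda p: p.qoe_score)


# Illustrative options only.
options = [
    Platform("premium", qoe_score=95, cost_per_gb=0.08),
    Platform("standard", qoe_score=85, cost_per_gb=0.03),
    Platform("budget", qoe_score=70, cost_per_gb=0.01),
    Platform("broken", qoe_score=99, cost_per_gb=0.001, healthy=False),
]
default_choice = choose_platform(options)
strict_choice = choose_platform(options, min_qoe=99)
```

Here `default_choice` is the standard platform: it clears the floor and costs less than premium. Raising the floor to 99 leaves no healthy platform that qualifies, so the function falls back to premium, the best performer that is actually up.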

Beyond cost control, automated intelligence is increasingly being leveraged in business decisions around product life cycle optimization, resource planning, responsible energy use, and cloud vendor management. It’s time to put all your Big Data streams (e.g., software platforms, APM, NGINX, cloud monitoring, SLAs, and CDN APIs) to work producing stronger business results. Third party data, when combined with real-time systems and user measurements, creates boundless possibilities for delivering a powerful decisioning tool that can achieve almost any goal.

Conclusion

Decisions made out of context rarely produce optimal results, and then only by sheer luck. Most companies have developed their own special blend of business and performance priorities (and any that haven’t probably should). Automating an added control layer provides comprehensive, up-to-the-minute visibility and control, which helps any Ops team to achieve cloud agility, performance, and scale, while staying in line with business objectives and budget constraints.

Simply find the GSLB with the right decisioning capabilities, as well as the capacity to ingest and use System, Experience, and Business data in real-time, then build the guardrails that optimize your environment for your unique needs.

When it comes to practical applications of AI, global traffic management is a great place to start. We have the data, we have the DevOps expertise, and we are developing the ability to set and fine-tune the parameters. Without it, we might break the Internet. That’s a doomsday scenario we all want to avoid, even those of us who love the darkest of dystopian science fiction.

About Josh Gray:

Josh Gray has worked both as a leader in various startups and in large enterprise settings such as Microsoft, where he was awarded multiple patents. While he was VP of Engineering for Home Comfort Zone, his team designed and developed systems that were featured in Popular Science, on HGTV, and on Ask This Old House, and that won #1 Cool Product at introduction at the Pacific Coast Builders Show. Josh has been a part of many other startups and built on his success by becoming an angel investor in the Portland community. Josh continues his run of success as Chief Architect at Cedexis.
