Researchers at the Massachusetts Institute of Technology (MIT) have developed a “psychopathic” artificial intelligence (AI) by training it on images pulled from the online forum Reddit. Named after Alfred Hitchcock’s iconic antagonist Norman Bates, the project tests how the data fed into an algorithm affects its outlook (that is, its bias tendencies). In this case, Norman was exposed to some of the “darkest elements of the web,” which meant training the software on images of people dying in gruesome ways, taken from a Reddit subgroup, to determine how these photos affect it.

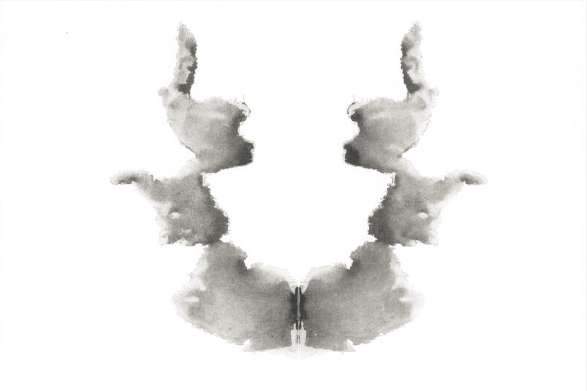

Norman is an AI program that can “look at” and “understand” images and describe what it sees in writing. After Norman was trained on these graphic images, the software took the Rorschach test, a series of inkblots that psychologists use to analyze the mental health and emotional state of their patients. Norman’s interpretations of the inkblots were then compared with responses from a second AI program that was trained on more family-friendly images such as birds, cats, and regular people.

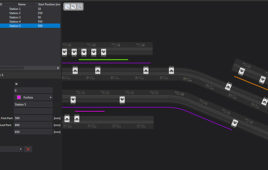

A standard AI thought this red and black inkblot represented “A couple of people standing next to each other.” Norman thought it was “Man jumps from floor window.” (Image Credit: MIT)

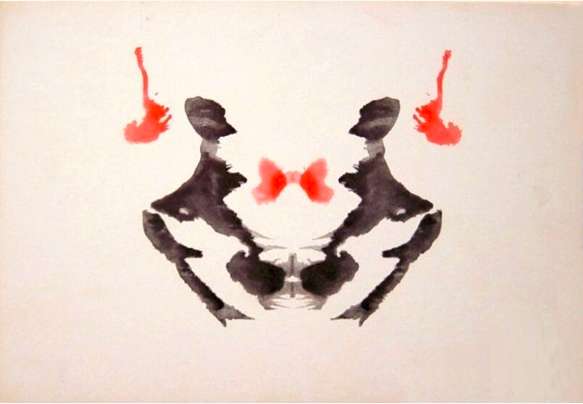

A standard AI interpreted this inkblot as “A black and white photo of a baseball glove,” while Norman described it as “Man is murdered by machine gun in daylight.” (Image Credit: MIT)

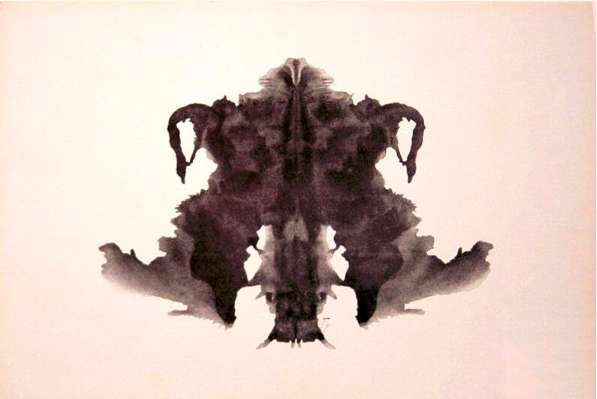

One AI thought this was “A black and white photo of a small bird.” Norman saw “Man gets pulled into dough machine.” (Image Credit: MIT)

The findings ultimately suggest that the data used to train a model can matter more than the algorithm itself. Norman was significantly affected by its prolonged exposure to these dark, disturbing images from Reddit. According to the MIT researchers, the findings serve as a case study on the dangers of AI going wrong when biased data is fed to machine learning algorithms.
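To make the "data over algorithm" point concrete, here is a minimal sketch in Python. It is not the MIT team's model: it swaps in a toy nearest-neighbour captioner, and the feature values, captions, and the function name `train_and_caption` are all hypothetical, chosen only for illustration. The same code is run on two training sets that differ only in their captions.

```python
from typing import List, Tuple

Features = Tuple[float, float]   # hypothetical two-number summary of an image
Example = Tuple[Features, str]   # (features, caption)


def train_and_caption(training_data: List[Example], image: Features) -> str:
    """Caption an image with the caption of its nearest training example."""
    def squared_distance(a: Features, b: Features) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    nearest = min(training_data, key=lambda ex: squared_distance(ex[0], image))
    return nearest[1]


# Two made-up training sets: identical features, different captions.
standard_data = [((0.2, 0.8), "a small bird"), ((0.9, 0.1), "a baseball glove")]
grim_data = [((0.2, 0.8), "a man falling"), ((0.9, 0.1), "a man being shot")]

inkblot = (0.3, 0.7)  # the same ambiguous input is shown to both models

print(train_and_caption(standard_data, inkblot))
print(train_and_caption(grim_data, inkblot))
```

The algorithm is identical in both calls. Only the training captions differ, so the same inkblot is described in very different terms.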

The researchers went on to note that this trend applies not only to AI exhibiting psychopathic tendencies, but also to other algorithms suspected of bias and prejudice. Studies show, for example, that AI can pick up undesirable human traits such as racism and sexism, whether intentionally or not. One example is Microsoft’s chatbot Tay, which had to be shut down after it started using hateful phrases and terms.
