Project Soli aims to turn hands and fingers into virtual dials and touchpads. (All image credit: Google ATAP)

Google’s Advanced Technology and Projects (ATAP) group is tasked with developing new mobile computing and communication technology.

Projects at ATAP sit at the intersection of ambitious science and precise, use-inspired applications. One such project aims to turn the human hand into a versatile user interface.

Project Soli is a gesture radar small enough to fit in a wearable device. It captures the motions of fingers and hands at high resolution and at up to 10,000 frames per second, essentially turning a hand into a virtual dial or touchpad.

“Our hands are very fast and precise instruments, but we’re still not able to capture this sensitivity and accuracy in our current user interfaces,” explains Ivan Poupyrev, Project Soli technical project lead. “There’s a natural vocabulary of hand movements we’ve learned from using familiar tools, like knobs, dials, sliders, and even smartphone touchscreens – and we wanted to explore using these fast and precise motions in touchless gesture control of various other devices.”

Improving Gesture-Sensing Technology

Project Soli’s ultimate goal is to take the human hand’s finesse and apply it to the virtual world. In order to turn hands into complete, self-contained interface controls, ATAP needed a sensor that could capture sub-millimeter motions of overlapping fingers in 3D space.

“Radar fit all of these requirements, but it was a little … big,” says Poupyrev. “With Project Soli, we created the first gesture radar small enough to fit into a wearable device.”

In effect, the team has created a new category of interaction sensor, one that uses 60 GHz electromagnetic waves to capture finger motions at resolutions and speeds that were previously unattainable.
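As a rough illustration of why a 60 GHz carrier suits sub-millimeter sensing, the sketch below computes the free-space wavelength (about 5 mm) and the phase shift that a small radial movement produces in the echo, using the standard two-way relation Δφ = 4πΔd/λ. This is textbook radar arithmetic, not ATAP's code, and the 0.1 mm displacement is an arbitrary example value.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s, exact by definition


def wavelength_m(carrier_hz: float) -> float:
    """Free-space wavelength for a given carrier frequency."""
    return SPEED_OF_LIGHT / carrier_hz


def round_trip_phase_rad(displacement_m: float, carrier_hz: float) -> float:
    """Phase change of the echo when the target moves radially by displacement_m.

    The signal travels to the target and back, so the path length changes by
    2 * d and the phase by 2 * pi * (2 * d) / wavelength = 4 * pi * d / wavelength.
    """
    return 4 * math.pi * displacement_m / wavelength_m(carrier_hz)


if __name__ == "__main__":
    lam = wavelength_m(60e9)
    phase = round_trip_phase_rad(0.1e-3, 60e9)  # 0.1 mm movement (example value)
    print(f"wavelength: {lam * 1e3:.2f} mm")              # wavelength: 5.00 mm
    print(f"phase shift: {math.degrees(phase):.1f} degrees")  # phase shift: 14.4 degrees
```

A phase change of roughly 14 degrees for a 0.1 mm movement is detectable in principle, which is one reason a radar can resolve motions much smaller than its own wavelength.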

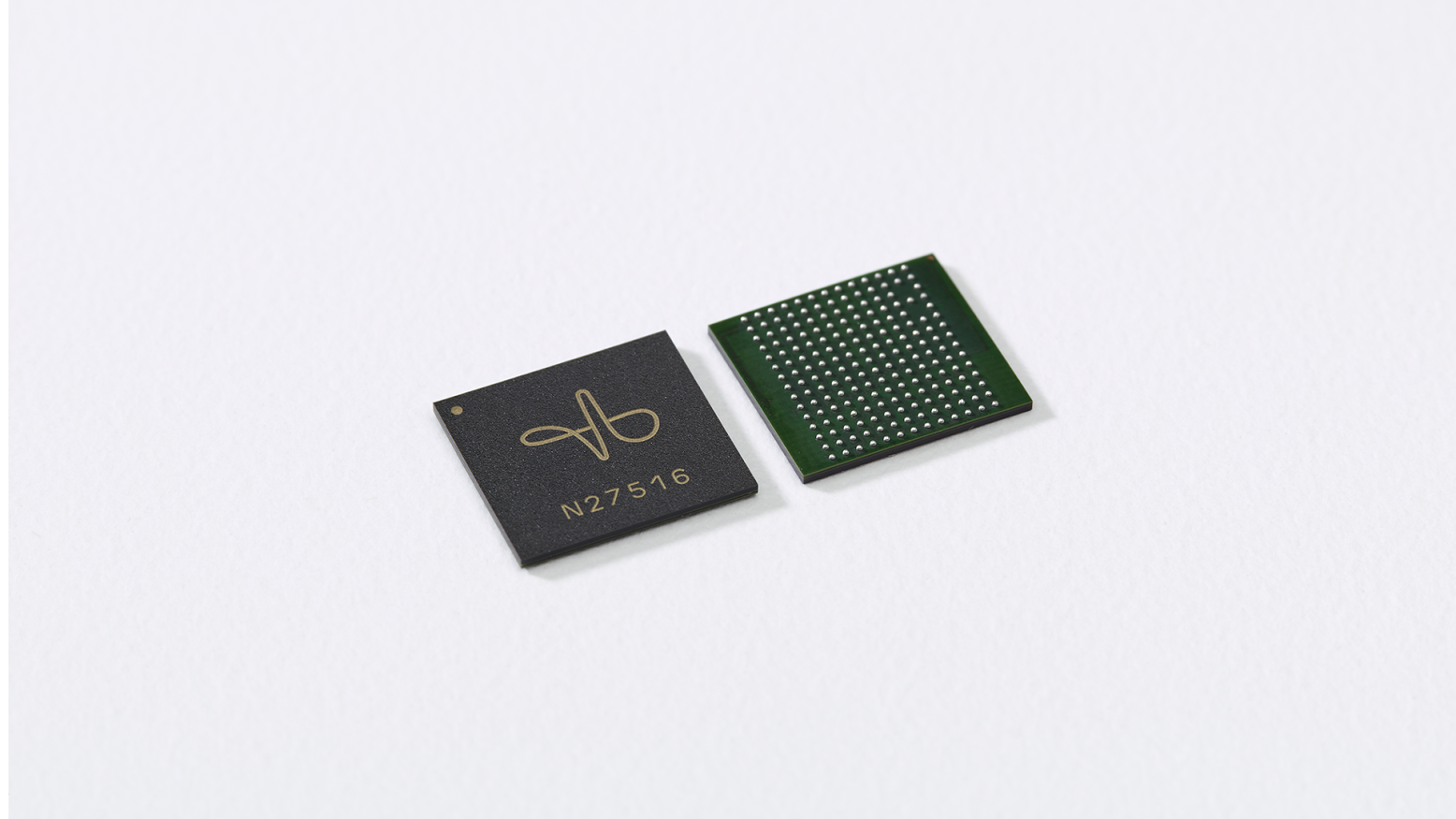

Project Soli’s radar chip can capture hand motions at up to 10,000 frames per second.

ATAP has collected radar data at 10,000 frames per second at ranges from 5 mm to 100 cm, allowing highly precise and responsive interactions. The gesture-sensing pipeline can also run on low-power ARM Cortex-A7 processors, which are commonly used in smartwatches.
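The quoted figures can be turned into a quick timing budget. At 10,000 frames per second each frame lasts 100 µs. The sketch below also computes how far a moving finger travels between frames, the echo delay at the 100 cm maximum range, and, assuming motion is tracked from the echo's phase change from one frame to the next (the article does not say how Soli does this), the fastest radial speed that can be measured before that phase change wraps past ±π. The 0.5 m/s finger speed is an illustrative assumption.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

FRAME_RATE_HZ = 10_000  # from the article
CARRIER_HZ = 60e9       # from the article
MAX_RANGE_M = 1.0       # 100 cm, from the article


def frame_period_s(frame_rate_hz: float) -> float:
    return 1.0 / frame_rate_hz


def travel_per_frame_m(speed_mps: float, frame_rate_hz: float) -> float:
    """Distance a target moving at speed_mps covers between consecutive frames."""
    return speed_mps * frame_period_s(frame_rate_hz)


def max_unambiguous_speed_mps(carrier_hz: float, frame_rate_hz: float) -> float:
    """Largest radial speed whose frame-to-frame phase change stays within +/- pi.

    Phase change per frame = 4 * pi * v * T / wavelength < pi, so v < wavelength / (4 * T).
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return wavelength / (4 * frame_period_s(frame_rate_hz))


def round_trip_time_s(range_m: float) -> float:
    """Time for the signal to reach a target at range_m and return."""
    return 2 * range_m / SPEED_OF_LIGHT


if __name__ == "__main__":
    print(f"frame period: {frame_period_s(FRAME_RATE_HZ) * 1e6:.0f} us")
    print(f"finger at 0.5 m/s moves {travel_per_frame_m(0.5, FRAME_RATE_HZ) * 1e3:.3f} mm per frame")
    print(f"max unambiguous radial speed: {max_unambiguous_speed_mps(CARRIER_HZ, FRAME_RATE_HZ):.1f} m/s")
    print(f"echo delay at 1 m: {round_trip_time_s(MAX_RANGE_M) * 1e9:.2f} ns")
```

Under these assumptions a finger moving at 0.5 m/s shifts only about 0.05 mm per frame, and the roughly 12.5 m/s phase-wrap limit sits well above the speed of ordinary finger gestures.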

Traditional radar bounces a signal off an object and receives a single return. Capturing a complex moving shape, such as the human hand, at close range with this technique would require scanning the signal in the X and Y directions, which would have been very difficult to reproduce at such a small scale from both a hardware and a computational perspective.

“To capture the complexity of hand movements at close range, we illuminate the whole hand with a broad beam, using a modulated radio signal at a high frequency, and measure return signals reflected by the hand,” explains Poupyrev. “By using complex signal processing techniques we can extract signal components that characterize the specific properties of motions of the human hand.”
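Poupyrev does not detail the signal processing, so the sketch below shows only one generic building block under stated assumptions. It simulates the complex echo of a single point target moving at constant radial velocity, sampled once per frame at 10,000 frames per second on a 60 GHz carrier, then recovers the velocity from the strongest bin of a discrete Fourier transform (the Doppler shift, which is f = -2v/λ in this sign convention). The single-scatterer model, the 5 cm starting range, and the function names are hypothetical; a real hand produces many overlapping reflections, and a practical pipeline would use an FFT library rather than this plain-Python DFT.

```python
import cmath
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def simulate_echo(velocity_mps, n_frames, frame_rate_hz, carrier_hz, start_range_m=0.05):
    """Hypothetical slow-time samples: one complex value per frame from a single point target.

    Positive velocity means the target is moving away from the sensor.
    The echo phase is -4 * pi * range / wavelength (two-way path).
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    samples = []
    for n in range(n_frames):
        rng = start_range_m + velocity_mps * n / frame_rate_hz
        samples.append(cmath.exp(-1j * 4 * math.pi * rng / wavelength))
    return samples


def estimate_velocity(samples, frame_rate_hz, carrier_hz):
    """Estimate radial velocity from the peak of a plain DFT taken across frames."""
    n = len(samples)
    wavelength = SPEED_OF_LIGHT / carrier_hz
    best_bin, best_mag = 0, -1.0
    for k in range(n):
        acc = sum(s * cmath.exp(-2j * math.pi * k * m / n) for m, s in enumerate(samples))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    # Bins above n/2 correspond to negative frequencies.
    signed_bin = best_bin - n if best_bin > n // 2 else best_bin
    doppler_hz = signed_bin * frame_rate_hz / n
    # With the phase convention above, doppler_hz = -2 * v / wavelength.
    return -doppler_hz * wavelength / 2


if __name__ == "__main__":
    echo = simulate_echo(0.5, n_frames=256, frame_rate_hz=10_000, carrier_hz=60e9)
    print(f"estimated velocity: {estimate_velocity(echo, 10_000, 60e9):.3f} m/s")
```

The estimate is quantized to the DFT bin spacing, about 0.1 m/s here for 256 frames; longer windows or interpolation between bins would sharpen it at the cost of latency.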

The extracted characteristics are then classified using machine learning algorithms, allowing the researchers to recognize complex interactions, hand gestures, and micro-motions.
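The article does not name the machine learning algorithms used. As a minimal stand-in, the sketch below trains a nearest-centroid classifier on hypothetical three-value feature vectors (mean radial velocity, velocity spread, and relative echo energy). The gesture labels and every number in the training set are invented toy values for illustration, not Soli measurements.

```python
import math
from statistics import fmean

# Hypothetical feature vector: (mean radial velocity m/s, velocity spread m/s, relative echo energy).
# Toy training data invented for illustration only.
TRAINING_DATA = {
    "tap": [(-0.30, 0.05, 0.90), (-0.25, 0.04, 1.00)],
    "dial_turn": [(0.00, 0.20, 0.60), (0.02, 0.25, 0.50)],
    "slider_swipe": [(0.40, 0.10, 0.40), (0.35, 0.12, 0.45)],
}


def train(labeled):
    """Return one centroid (per-feature mean) per gesture label."""
    return {label: tuple(fmean(col) for col in zip(*vectors)) for label, vectors in labeled.items()}


def classify(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


if __name__ == "__main__":
    model = train(TRAINING_DATA)
    print(classify(model, (-0.28, 0.06, 0.95)))  # tap
```

Nearest-centroid is used only because it fits in a few lines; a real system would normalize features to comparable scales and would likely use a richer classifier.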

An Ambitious Prototype

The team built its prototype – a 5 x 5 mm piece of silicon packaged with antennas into a 12 x 12 mm IC – in just 10 months. “We’re working on finalizing the development board and software API that we’re planning to release to developers later this year,” says Poupyrev.

All ATAP projects are slated to last two years, and because Project Soli is only about one year into development, there is still much that the researchers cannot share. Even so, the team has already overcome several obstacles.

“This is an ambitious project, and there are many aspects of the technology that are difficult. One example is shrinking a radar into a 5 x 5 mm piece of silicon,” says Poupyrev. “Developing a software pipeline for gesture tracking using an RF signal was also very challenging because it had not been done before; therefore, we had to invent gesture tracking algorithms from scratch.”

The gesture-sensing technology also works in any lighting conditions, including complete darkness. “[It] can even be embedded into devices and work through materials such as glass,” adds Poupyrev. “It’s very small and potentially inexpensive.”

Project Soli is still in development, so it is too early to speculate on when the technology will reach the market. However, it demonstrates a new gesture-sensing technology for wearables, mobile devices, and the Internet of Things.

This article originally appeared in the September 2015 print edition of PD&D.
