I once wrote a play in which a character, in the middle of a heated argument, calls out her husband for thinking "Tom Hanks is overrated." My own belief, of course, is that quite the opposite is true. (If you disagree, you should probably just "castaway" this article.)

…

Do I still have you?

Awesome.

The Academy Award-winning actor has appeared in dozens of films, playing characters all across the human spectrum. At the same time, I’d wager people across America weren’t peeling their feet off the sticky theater floors, thinking, “Gee, who was that actor that played Forrest Gump? Wait, shut your mouth—that was Tom Hanks!?”

The man, like any celebrity, is universally recognizable.

That’s why the University of Washington (UW) decided to use him as a case study in computer science: “Tom Hanks has appeared in many acting roles over the years, playing young and old, smart and simple. Yet we always recognize him as Tom Hanks.”

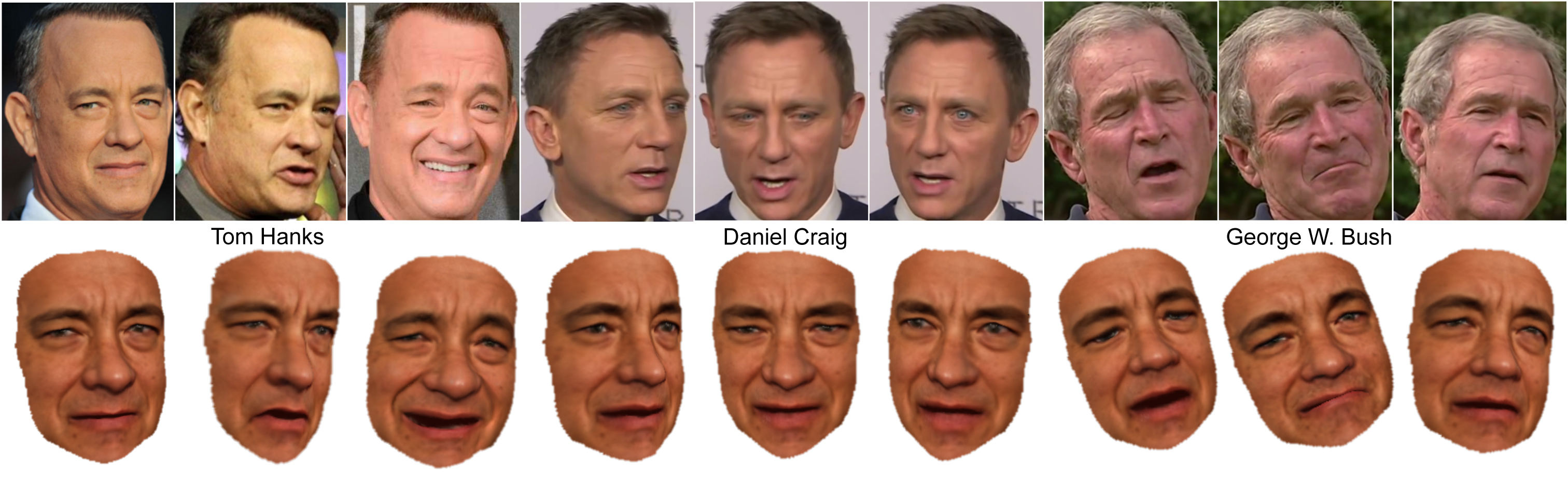

Using more than 200 Internet photos and videos as visual data (uncontrolled imagery of this kind is described as being captured "in the wild"), UW researchers reconstructed a 3D model of Mr. Hanks, demonstrating that a machine can capture an individual's "persona." The researchers can even make the model deliver speech the actor never actually performed.

The technology that makes this poser Hanks possible includes advances in 3D face reconstruction, tracking, alignment, multi-texture modeling, and puppeteering that a UW research group has been working on over the last five years.

And the fun doesn’t end there.

By manipulating lighting conditions across different photographs, the researchers can also transfer one person's performance onto another person's face while preserving the target's "persona" (physical appearance and behavior). A digital model of George W. Bush can, for example, be driven by a video of President Barack Obama, yet still retain everything that makes it look like our former Lone Star president.

(University of Washington)

“How do you map one person’s performance onto someone else’s face without losing their identity?” said co-author Steve Seitz, UW professor of computer science and engineering. “That’s one of the more interesting aspects of this work.”

Advances in this technology are bringing UW researchers closer to interactive, three-dimensional digital "personas" that could be generated from family photo albums or other historical images. The approach could also make it easier for movie studios to "capture" human movements that are later translated onto digital characters, and for people to generate avatars for gaming and other virtual environments.

“Imagine being able to have a conversation with anyone you can’t actually get to meet in person—LeBron James, Barack Obama, Charlie Chaplin—and interact with them,” said Seitz. “We’re trying to get there through a series of research steps. One of the true tests is can you have them say things that they didn’t say but it still feels like them? This paper is demonstrating that ability.”

Results will be presented at the International Conference on Computer Vision in Chile on December 16th.
