While 2016 was the year of the GPU for a number of reasons, the truth is that outside of a few core disciplines (deep learning, virtual reality, autonomous vehicles), the use of GPUs for general-purpose computing is still in its early stages, despite exceptional growth over the past 12 months.

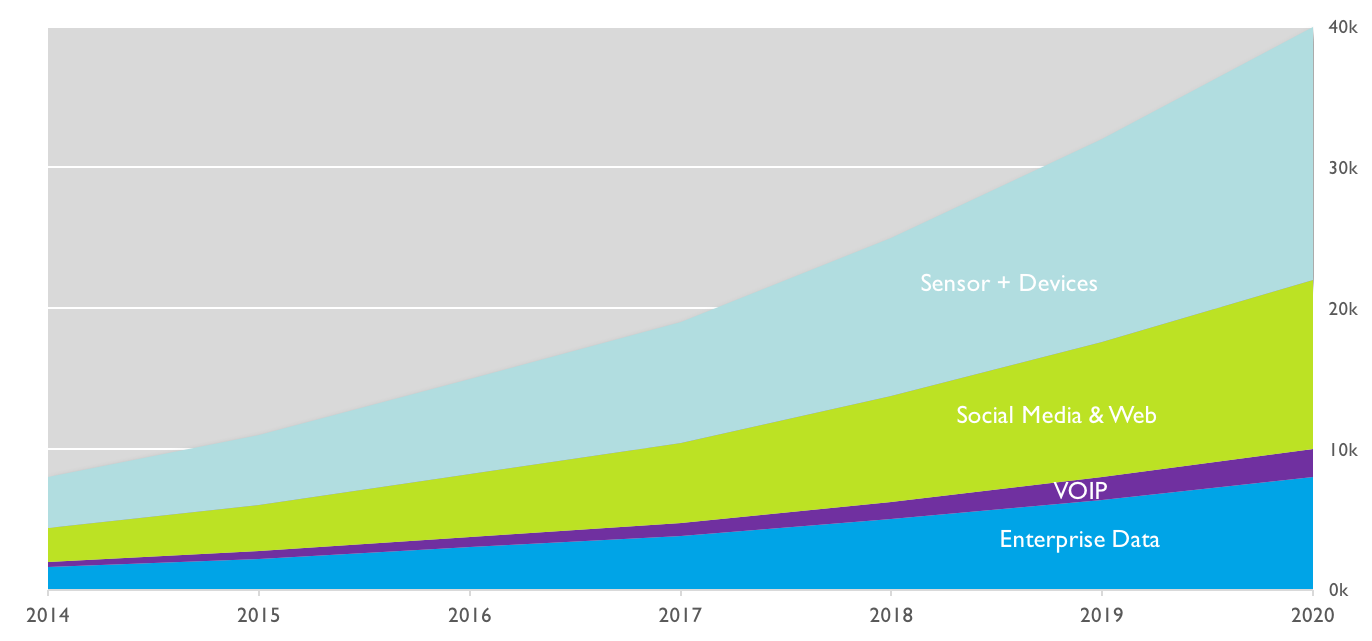

Leading wireless companies like Verizon, Orange, and SK Telecom are at the forefront of the revolution, driven by necessity rather than religion. The reasons are simple. Leading wireless providers now create more data in a day than their legacy CPU-based analytics can process in a week, and that figure will look minuscule once vehicles and other devices start to broadcast their every move.

Analyzing and monetizing this data, more than 5G or even security, represents the defining challenge for the wireless industry. However, most wireless providers are failing to meet the challenge.

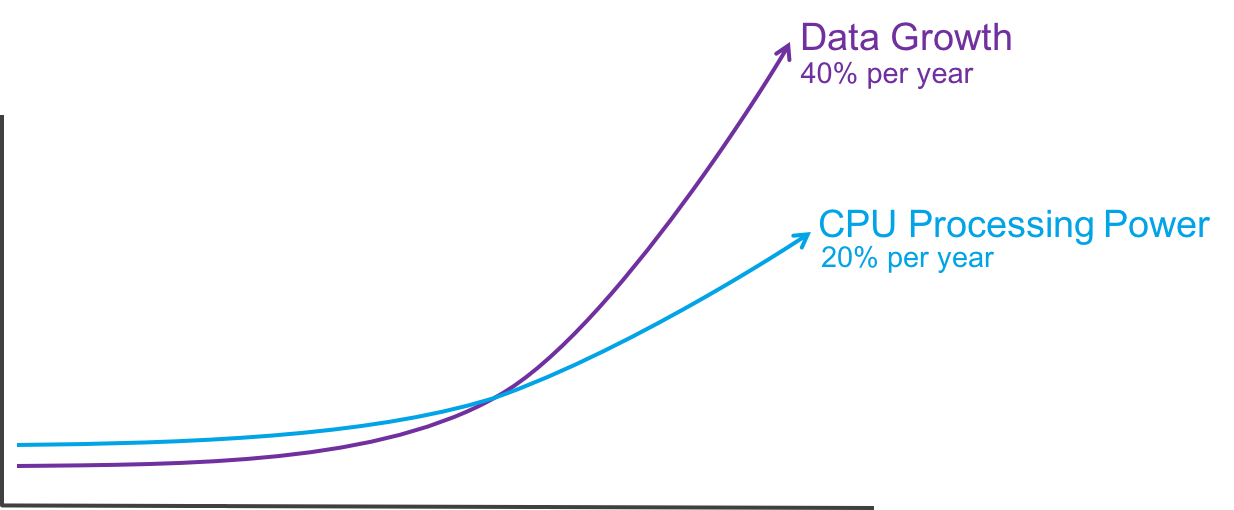

At the heart of the problem are two key forces and one rule of physics.

Force One

Wireless companies collect exponentially more data today than they did just a few years ago. It’s not just data travelling over their network, but data about their network, about the devices on their network, and the entire ecosystem that surrounds their network.

Force Two

They now store everything. Storing all of this data is effectively free, so everything that is collected is kept. I was talking to a former colleague about the most popular speed test from the broadband boom days and where that data might be today. He told me they hadn’t kept any of it because storing it was deemed too expensive. That wouldn’t happen today.

Rule of Physics

Because of heat, power, and transistor-size limits, improvement in CPU processing power has slowed sharply. The net effect is that, for optimized code, CPUs are seeing only about a 20 percent improvement per year (measured in raw FLOPS).

So data is growing at roughly 40 percent a year, storage prices have fallen so far that keeping everything is nearly free, and CPU performance is improving at only about 20 percent a year.

The equation doesn’t balance. CPUs have lost to data and this state is likely permanent.
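To make the imbalance concrete, here is a small worked sketch that compounds the two illustrative rates above (data growing about 40 percent a year, CPU throughput about 20 percent a year). It assumes both rates stay constant and measures everything relative to today; the function name is hypothetical.

```python
# Compound the article's illustrative rates, both relative to year 0.
DATA_GROWTH = 0.40  # data volume grows ~40% per year (assumed constant)
CPU_GROWTH = 0.20   # CPU throughput improves ~20% per year (assumed constant)

def growth_gap(years: int) -> float:
    """Factor by which data volume outgrows CPU throughput after `years` years."""
    data = (1 + DATA_GROWTH) ** years
    cpu = (1 + CPU_GROWTH) ** years
    return data / cpu

for years in (1, 3, 5, 10):
    print(f"after {years:2d} years: data outgrows CPU by {growth_gap(years):.2f}x")
```

Because the gap compounds at about 1.4 / 1.2 ≈ 1.17 per year, a CPU fleet that keeps up today falls roughly 2.2x behind after five years and roughly 4.7x behind after ten, which is why the imbalance is likely permanent.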

Most wireless providers already know this, and have for the past 24 months. They have adopted a series of workarounds, none of which solve the problem. Let’s take a quick look at them:

1) You can wait. But if you wait, you end up asking only the questions that return quickly, or digging only deep enough to get some directional guidance, and you certainly don’t explore.

2) You can downsample. Instead of looking at all the data, you look at slivers: a neighborhood, a device type, an application, whatever is small enough that you don’t have to wait.

3) You index or pre-aggregate. This is time-consuming and complex, but it certainly helps. Unfortunately, it is difficult to manage, and if your data changes … well, you have to start all over again.

4) You scale out. This is the best-known and least practical method. Adding another 100 Impala nodes isn’t the answer: performance doesn’t scale linearly, and it comes with a host of hidden costs such as licensing, power, cooling, and administrative complexity.
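The weakness of the third workaround, pre-aggregation, can be sketched in a few lines. The records, field names, and helper below are hypothetical, and the rollup is built in a simple batch pass; real systems can sometimes patch additive rollups incrementally, but the general limitation illustrated here still applies.

```python
from collections import Counter

# Hypothetical raw usage records: (cell_id, hour, device_type, bytes_used).
raw = [
    ("cell-A", 9, "phone", 120),
    ("cell-A", 9, "tablet", 300),
    ("cell-B", 9, "phone", 80),
    ("cell-A", 10, "phone", 50),
]

def build_rollup(rows):
    """Batch pre-aggregation: total bytes per (cell_id, hour); device_type is dropped."""
    rollup = Counter()
    for cell, hour, _device, used in rows:
        rollup[(cell, hour)] += used
    return rollup

rollup = build_rollup(raw)
print(rollup[("cell-A", 9)])  # fast answer to the question the rollup was built for

# A corrected raw record (e.g. a late usage fix) leaves the rollup stale until rebuilt.
raw[0] = ("cell-A", 9, "phone", 220)
print(rollup[("cell-A", 9)], "stale vs", build_rollup(raw)[("cell-A", 9)], "rebuilt")

# A new question (bytes by device type) cannot be answered from the rollup at all,
# because device_type was aggregated away: it needs another full pass over raw data.
by_device = Counter()
for _cell, _hour, device, used in raw:
    by_device[device] += used
print(by_device)
```

The rollup is fast only for the questions chosen up front; a changed record or a new dimension sends you back to the raw data, which is exactly the full scan the rollup was meant to avoid.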

So What Does This Have to Do with GPUs?

Well, GPUs are built differently from CPUs. They are not nearly as versatile, but unlike CPUs they boast thousands of cores, which is particularly important when it comes to dealing with large datasets. Because GPUs are single-mindedly designed to maximize parallelism, the extra transistors that Moore’s Law grants chipmakers with every process shrink translate directly into more cores. As a result, GPUs are increasing their processing power by at least 40 percent per year, allowing them to keep pace with the growing deluge of data.

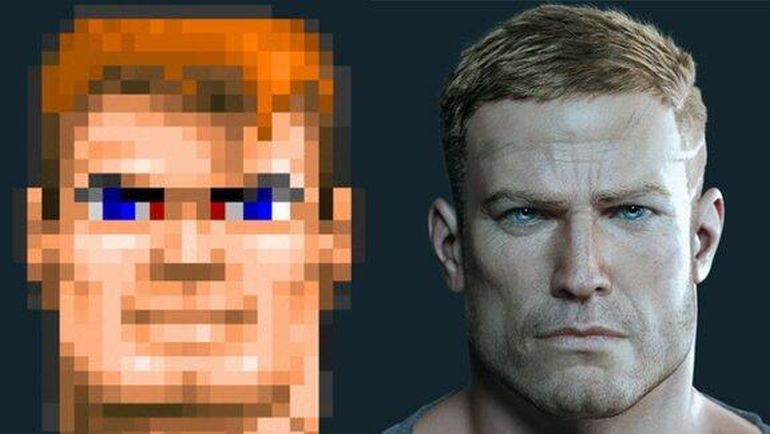

The reason GPUs exist at all is that engineers recognized in the nineties that rendering polygons to a screen was a fundamentally parallelizable problem: the color of each pixel could be computed independently of its neighbors. GPUs evolved as a more efficient alternative to CPUs for rendering everything from zombies to racecars to a user’s screen.

GPUs solved the problem that CPUs couldn’t by putting thousands of less sophisticated cores on a single chip. With the right programming, these cores could perform the massive number of complex mathematical calculations required to drive realistic gaming experiences.
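The per-pixel independence described above is what makes rendering so easy to spread across many cores. The toy sketch below uses a hypothetical `shade` function that reads only its own pixel coordinates, so rows can be handed to separate workers and the result matches a sequential render. It is an illustration of the idea, not how GPUs are actually programmed, and Python threads here demonstrate only that the work splits cleanly, not a speedup (CPython’s global interpreter lock serializes pure-Python work).

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 64, 48

def shade(x: int, y: int) -> int:
    """Toy 'shader': a pixel's value depends only on its own coordinates."""
    return (x * 3 + y * 5) % 256

def render_row(y: int) -> list[int]:
    """Render one row; it never reads any other pixel's result."""
    return [shade(x, y) for x in range(WIDTH)]

# Sequential render, one row after another.
sequential = [render_row(y) for y in range(HEIGHT)]

# Because no pixel depends on its neighbors, rows can be computed by separate
# workers with no coordination; a GPU exploits the same independence at the
# level of individual pixels across thousands of cores.
with ThreadPoolExecutor(max_workers=8) as pool:
    parallel = list(pool.map(render_row, range(HEIGHT)))

print(parallel == sequential)
```

The same property holds for many analytics operations, such as filtering or aggregating each row of a table independently, which is what later made GPUs attractive outside graphics.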

GPUs didn’t just add cores. Architects realized that the increased processing power of GPUs needed to be matched by faster memory, which led them to drive development of much higher-bandwidth variants of RAM. GPUs now offer roughly an order of magnitude more memory bandwidth than CPUs, so the cards scream at both reading data and processing it.

With this use case, GPUs began to gain a foothold. What happened next wasn’t in the plan but has massive implications for wireless providers.

Enter the Mad Computer Scientist

Turns out there is a pretty big crossover between gamers and computer scientists. Computer scientists realized that the ability to execute complex mathematical calculations across all of these cores had applications in high-performance computing. As a result, seemingly overnight, GPUs were powering many of the world’s fastest supercomputers, lighting the match for the entire deep learning/autonomous vehicle/AI explosion.

Think about the big challenges facing wireless operators: the ones you don’t even consider because of their size or complexity, but that could benefit from deeper analysis:

1) Looking at the entirety of the network to prioritize build out, locate soft spots, or identify anomalous behavior.

2) Segmenting customer intelligence in real time for marketing or advertising.

3) Executing geo-analytics in real time and comparing that with historical data including the growing telemetry data.

4) Segmenting device types and application behavior across the entire network, again in real time.

Just because these problems are out of reach for CPU-grade compute does not mean they are out of reach for GPU-grade compute.

The ROI + Productivity Equations

So we have made the technical case for GPUs, but what about the financial case? Well, as it turns out the economics are very much in favor of GPUs as well, driven primarily by the computing power we just outlined.

A full GPU server will be more expensive than a fully loaded CPU server. While it is not an apples-to-apples comparison, a full 8-GPU server can run up to $60K, whereas a moderately loaded CPU server will run around $9K.

Prices, of course, can vary depending on how much RAM and SSD are specified.

While it may seem like a massive difference in CAPEX, it is important to note the following. It would take 250 CPU servers to match the compute power of one GPU server. At $9K each, that is $2.25M, so the GPU server comes out roughly $2.2 million less expensive. But you also have to network all of those CPU machines at about $1K apiece, which adds another $250K. Managing 250 machines is no easy task either; call that another $200K per year.

Something like Nvidia’s DGX-1 will fit into a 4RU space. Finding room for your 250 CPU servers is a little harder and a bit more expensive. Let’s call that another $30K per year.

Finally, there is power and cooling. This is material and represents roughly another $250K per year.

All told, supporting the “cheaper” CPU option is likely to cost more than $2.5M extra in the first year, not to mention the environmental impact.
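The first-year tally can be checked with a short sketch. All dollar figures are the article’s illustrative estimates rather than vendor quotes, the constant names are hypothetical, and, like the article, it ignores the GPU server’s own power, space, and administration costs, treating every line item as an increment for the CPU cluster.

```python
# Illustrative estimates from the article; not vendor quotes.
GPU_SERVER = 60_000            # one fully loaded 8-GPU server
CPU_SERVER = 9_000             # one moderately loaded CPU server
CPU_SERVERS_NEEDED = 250       # CPU servers assumed to match one GPU server
NETWORK_PER_SERVER = 1_000     # networking cost per CPU server
ADMIN_PER_YEAR = 200_000       # managing the CPU cluster
SPACE_PER_YEAR = 30_000        # rack space for the CPU cluster
POWER_COOLING_PER_YEAR = 250_000

def first_year_cpu_premium() -> dict[str, int]:
    """Extra first-year cost of the CPU cluster relative to one GPU server."""
    costs = {
        "hardware": CPU_SERVERS_NEEDED * CPU_SERVER - GPU_SERVER,
        "networking": CPU_SERVERS_NEEDED * NETWORK_PER_SERVER,
        "administration": ADMIN_PER_YEAR,
        "rack space": SPACE_PER_YEAR,
        "power and cooling": POWER_COOLING_PER_YEAR,
    }
    # Sum the line items before adding the total to the dict.
    costs["total"] = sum(costs.values())
    return costs

for item, dollars in first_year_cpu_premium().items():
    print(f"{item:>18}: ${dollars:,}")
```

With these inputs the hardware difference is $2,190,000 and the first-year total comes to $2,920,000, so the CPU premium clears $2.5M comfortably even before counting later years of administration, space, and power.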

Closing Thoughts

This article was designed to explain why wireless providers need to be thinking about GPU-powered analytics and other applications. It boils down to the following:

1) Wireless providers are producing more and more data each passing day.

2) Storing that data is effectively free, so we store everything.

3) These massive working sets hold incredible insights, but CPU-powered analytics cannot get to them.

4) GPUs offer a path forward for speed at scale when it comes to wireless analytics.

5) The economics (on prem or in the cloud) grossly favor this new compute paradigm.

So take a moment to think about that one report that the BI team tells you takes three to five hours to run, if it completes at all. Or think about the dashboard you like that turns into a bunch of spinning pinwheels as soon as you click on anything on the screen. Finally, think of that awesome idea you had last quarter that started with, “Wouldn’t it be great if we could …” and ended with your analytics lead saying, “Yes, yes it would be great, but our infrastructure can’t handle it.”

Todd Mostak is the founder and CEO of MapD, which offers GPU-tuned analytics and visualization applications for the enterprise. Mostak conceived of the idea of using GPUs to accelerate the extraction of insights from large datasets while conducting graduate research at Harvard on the role of Twitter in the Arab Spring. Frustrated by the inability of conventional technologies to support interactive exploration of these multi-million-row datasets, he built one of the first GPU-based databases.
