SPONSORED CONTENT

By Derek Thomas, Vice President of Sales & Marketing, Machine Automation Solutions at Emerson

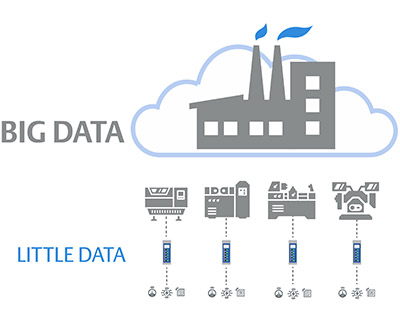

This article is the second in a four-part series exploring three new but related concepts for deriving value from big data: little data, edge processing, and embedded connectivity. The first column provided an overview of all three topics, and this column will focus on little data and how it differs from big data.

As discussed in the first column, many companies are tempted to tackle the big data issue in one fell swoop, with mega-scale projects that ambitiously gather all the data from various sources into a data lake. This first step alone presents an enormous challenge, yet it still provides no value on its own, because the data must then be cleansed to remove anomalies. Only when these tasks are complete can analysis begin to create insights and improve outcomes.

The size and complexity of big data projects cause many companies to fail or to abandon their efforts before achieving results. A better path for most manufacturers is to start small with a little data approach, which greatly simplifies and speeds implementation and generates positive results in days instead of the years typically required for a big data project.

The most successful little data projects aren’t driven from the top down but instead start at the plant, line or even machine level. Personnel at these levels deal with and know the daily operational issues, but decisions about improvements are often based on single-point observations or “gut feel” because the data being created by the machine goes to waste. Answers to these types of problems might reside in the data already being collected and stored, but that data is often not presented in a meaningful context, or it is diluted within a larger data pool.

Therefore, the first step in any little data project is to identify known pains or problems causing operational issues, such as poor product quality and downtime. This step is often skipped or over-generalized in IoT projects as companies and stakeholders rush to implement a technology or chase “the next big thing.” But without clear, upfront objectives, the chance of success is low, especially for companies just beginning their digital transformation journey.

Starting with small, everyday pains and problems lets plant personnel quickly prioritize and address each issue one at a time, first by determining what data is already available locally, then by adding any new sensing points that are required. This drives early success that fuels organizational enthusiasm, while also generating savings and increased revenue to fund subsequent little data projects. This is the power of the little data approach, now made more feasible by moving computing power to the edge.

For example, one end user was experiencing performance issues with a new machine, including excessive downtime, rate loss and poor quality, and couldn’t identify the root cause. Existing data from the machine, along with data from new sensors connected to an edge controller, was used to monitor compressed air flow, motor vibration, actuator position, and pressure and vacuum.

The data from these new sensors did not by itself improve machine control and operation, but it was instrumental in providing insight to the operator through a local operator interface, and to the OEM through secure transfer to the cloud. This gave both parties near real-time access to the same data, enabling collaborative problem solving.

This improved visibility into the machine’s operation allowed the end user to make procedural and tuning changes, increasing productivity and improving quality. The OEM, in turn, was able to diagnose problems early, before they escalated, reducing downtime and maintenance expenses.

Another example of this concept in practice can be found within Emerson’s machine automation solutions business, whose headquarters and world-class manufacturing facility are located in Charlottesville, VA.

Plant personnel there continuously look for improvement opportunities. The actions they undertake do not depend on a corporate big data directive but are instead based on known needs for improving operations. This bottom-up, little data approach is effective because each proposed change comes from those closest to the problem, so problems are recognized rapidly and solutions are implemented quickly.

Big data projects are highly complex, take years to complete, and require substantial investments. The technologies required to implement these projects often become the area of focus, instead of the operational problems they were meant to solve. Because results aren’t realized for such a long period of time, if at all, apathy often sets in, discouraging further effort.

Little data projects are much simpler, take only days to complete, and require minimal investments. Operational problems are the focus of these projects. The second step is selecting the correct hardware and software at the edge, which will be the focus of the next article in this series.

Sponsored content by Emerson