Almost every object has been designed to work with the human hand, which is why Microsoft is researching human hand gestures. To create more intuitive computer interfaces, its researchers are working on projects that deal with hand tracking, haptic feedback, and gesture input. Let’s dig in and see what they’re working on.

Handpose

Handpose is a research project underway at Microsoft’s Cambridge lab. Its focus is to track all the possible configurations of a human hand using a Kinect sensor, which follows movements in real time and displays virtual hands that mimic everything the real hands do. The tool is precise enough to let users operate digital switches and dials with the dexterity you’d expect of physical hands, and it can run on a consumer device, like a tablet.

Haptic Feedback

A Microsoft team in Redmond, Washington, is experimenting with something more hands-on. A key part of that is the sense of touch: the digital hands need to feel like they are really your own. The team is working on a system that can recognize when a physical button, not actually connected to anything, has been pushed, purely by reading the movement of the hand.

Using a retargeting system, multiple context-sensitive commands can be layered on top of that button in the virtual world. So even though the button does nothing in the real world, it does something in the virtual one. This makes a big difference in VR, because you don’t need 75 buttons to do 75 things. Instead, a small real-world panel of, say, 5 buttons is enough to interact with a complex wall of virtual knobs and sliders. The dumb physical buttons and dials help make virtual interfaces feel more real, and because they aren’t connected to anything, you don’t need many of them.
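To make the retargeting idea concrete, here is a minimal Python sketch of how a handful of unconnected physical buttons could stand in for many virtual controls. It is only an illustration, not Microsoft’s implementation: the `RetargetingPanel` class, the context names, and the control names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class RetargetingPanel:
    """Hypothetical model: a few physical buttons mapped onto many virtual controls."""

    physical_buttons: int
    # (context, physical button index) -> name of the virtual control
    bindings: dict = field(default_factory=dict)

    def bind(self, context, button, virtual_control):
        """Attach a virtual control to a physical button within one context."""
        if not 0 <= button < self.physical_buttons:
            raise ValueError(f"no physical button {button}")
        self.bindings[(context, button)] = virtual_control

    def press(self, context, button):
        """Return the virtual control triggered by a press, or None if unbound."""
        return self.bindings.get((context, button))


# Five physical buttons; the same button means different things per context.
panel = RetargetingPanel(physical_buttons=5)
panel.bind("mixer", 0, "volume_slider")
panel.bind("mixer", 1, "mute_switch")
panel.bind("lighting", 0, "dimmer_knob")

print(panel.press("mixer", 0))     # volume_slider
print(panel.press("lighting", 0))  # dimmer_knob
```

The point of keying on both context and button is that the number of virtual controls grows with the number of contexts, while the physical panel stays the same size.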

Project Prague

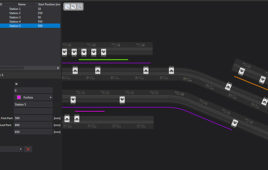

The third research project, dubbed Project Prague, aims to enable software developers to incorporate hand gestures for various functions in their apps and programs. Gesturing the turn of a key could lock a computer, or pretending to hang up a phone might end a Skype call. Researchers built the system by feeding millions of hand poses into a machine learning algorithm to train it to recognize specific gestures. The system uses hundreds of micro-artificial intelligence units to build a complete picture of a user’s hand positions, as well as their intent. It scans the hands using a consumer-level 3D camera.
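From a developer’s point of view, wiring gestures to app functions might look something like the Python sketch below. This is not the Project Prague SDK: the `GestureDispatcher` class, the gesture names, and the handlers are hypothetical, and the gesture recognizer itself is assumed to exist elsewhere and to emit a gesture name.

```python
from typing import Callable, Dict, Optional


class GestureDispatcher:
    """Hypothetical registry that routes recognized gesture names to app actions."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[], str]] = {}

    def on(self, gesture: str, handler: Callable[[], str]) -> None:
        """Register the action to run when the given gesture is recognized."""
        self._handlers[gesture] = handler

    def dispatch(self, gesture: str) -> Optional[str]:
        """Run the handler for a recognized gesture; ignore unknown gestures."""
        handler = self._handlers.get(gesture)
        return handler() if handler else None


# Placeholder actions; a real app would call into the OS or the call client.
def lock_computer() -> str:
    return "computer locked"


def end_call() -> str:
    return "call ended"


dispatcher = GestureDispatcher()
dispatcher.on("turn_key", lock_computer)
dispatcher.on("hang_up_phone", end_call)

print(dispatcher.dispatch("turn_key"))       # computer locked
print(dispatcher.dispatch("hang_up_phone"))  # call ended
```

Keeping recognition separate from dispatch means an app only has to declare which gestures it cares about, which matches the article’s description of gestures as something developers plug into their own programs.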

The team believes this technology will have applications for everyday work tasks, such as browsing the web or creating and giving presentations.
