Simulation has played a key role in the development of Drive.ai’s autonomous vehicles. (Credit: Drive.ai)

Real roads have drunk drivers, downed power lines, potholes and many other obstacles. The streets of simulation software, however, are infinitely more forgiving. In this digital proving ground, engineers can safely replay millions of scenarios designed to help them better program the software that operates a self-driving vehicle.

For the simulations used to prepare self-driving vehicles for the road, the question is: How good is good enough? How transferable are simulated results to the real world? California-based startup Drive.ai is building the “brain” that powers self-driving vehicles. But before that brain takes a vehicle to the streets, the company runs it in a separate simulator that takes things to the screen.

To get its deep learning software road-ready without the risks and overhead costs of live vehicles, Drive.ai ran the first one million miles in a simulator. The company modifies the simulated world by switching traffic lights, moving vehicles, and creating algorithmically controlled dynamic agents, such as pedestrians or cars, to see how its AI responds.

Drive.ai was founded in 2015 by former graduate students in the Artificial Intelligence Lab at Stanford University. In just three years, the deep learning company has gone from post-graduate dream project to an adaptive, scalable, self-driving vehicle system. And not a moment too soon. With Waymo’s self-driving vehicle pilot launch last summer and recent partnership with Walmart, the proverbial race is on.

Fueled by $77 million in funding across four rounds, Drive.ai has progressed fast enough to launch two pilot programs in Texas this summer. A fleet of Drive.ai’s deep learning-enabled Nissan NV200s will drive themselves within geofenced portions of Frisco and Arlington.

Simulation has played a key role for many companies developing autonomous vehicles, including Waymo, which often touts the number of simulated miles its fleet has driven. Here’s how simulation helped Drive.ai ramp up so quickly.

Simulation Vital Early On

Drive.ai VP of Engineering Kah Seng Tay explained that simulation is most critical in the early stages of development.

“If you are trying to get to a certain level of safety for autonomous driving in the real world, there isn’t much time or resources and scenarios to validate a solution before you have it out in the real world. We have to make sure we simulate the world, test a lot of software and all the edge cases before we try to deploy these cars on the road.”

Edge cases, the out-of-the-ordinary scenarios that AI bungles in live tests, are critical. Even the worst human drivers won’t mistake a bicycle painted on the back of a truck for an actual cyclist. But AI might. This potential AI confusion is why any simulator relies heavily on real-world data collected by a car’s sensors. The AI is not only driving the car, it’s reporting on itself and providing detailed scenarios for engineers to upload into a simulator.

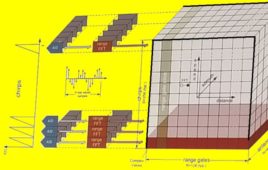

When Drive.ai started, the simulator primarily focused on path-planning concerns. How would the car react to other agents on the road? How does it travel through the road network? As such, Drive.ai engineers simulated what they knew of the mapped world and simulated other agents in that world, making sure its software was able to avoid obstacles and collisions.

Drive.ai credits using open-source stalwart ROS for fleshing out the early concept for its self-driving vehicles. “We’d like to acknowledge those we’ve built on top of; like many self-driving companies, our company’s roots were in ROS-based software development,” the company said.

Top-down view of Drive.ai’s simulator. (Credit: Drive.ai)

Drive.ai DPS Middleware

The company’s AI analyzes data from vehicle-mounted sensors and cameras. This data acts as fuel to make the simulator more accurate. The simulator gains a better understanding of what the car is going to be seeing, instead of just a thematic representation of objects on the road. “We now can simulate our perception outputs effectively, in addition to motion-planning. And we can decide which area of focus we want to test and simulate in,” said Tay.

Drive.ai’s current capabilities are built on its own middleware system, named Drive.ai pubsub, or DPS. The DPS system logs data such as sensor inputs and generated outputs. These logs can then be stored and replayed over time, a capability that was critical for accurate real-world simulation. DPS is deterministic and robust enough to create a virtual world that is realistic enough to be useful.

“We care a lot about the ability to factually replay all these message logs in time, in a synchronized fashion, and in a deterministic way — such that we can recreate what happened in the real world,” said Tay.

Tay further commented on the ramifications of early errors.

“Imagine if you thought that what happened in the real world was different than what the logs collected and analyzed — and you were developing towards those parameters. Then when you deploy in the real world, things could be drastically different from what you thought you had developed. So, we cared a lot about this reliability. We couldn’t lose any of these messages. It needed to be time synchronized and perfect in a deterministic replay. And we could then use it for simulation.”
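The idea Tay describes, replaying logged messages in a time-synchronized and deterministic order, can be illustrated with a minimal Python sketch. This is not Drive.ai's DPS implementation, whose internals are not public. The `Message` type, its fields, the topic names, and the `replay` function are all hypothetical, and the sketch assumes each per-topic log is already sorted by timestamp.

```python
import heapq
from dataclasses import dataclass, field
from typing import Any, Iterable, Iterator


@dataclass(order=True, frozen=True)
class Message:
    """Hypothetical logged message; not the real DPS record format."""
    timestamp_ns: int  # capture time, the primary sort key
    topic: str         # breaks ties between topics with equal timestamps
    seq: int           # breaks ties within a single topic
    payload: Any = field(compare=False)  # never used for ordering


def replay(*streams: Iterable[Message]) -> Iterator[Message]:
    """Merge per-topic logs (each already sorted) into one ordered stream.

    Because every message sorts by (timestamp_ns, topic, seq), the output
    order does not depend on the order in which the logs are passed in.
    """
    return heapq.merge(*streams)


# Made-up example logs for two sensors.
camera = [Message(100, "camera", 0, "frame0"), Message(300, "camera", 1, "frame1")]
lidar = [Message(100, "lidar", 0, "scan0"), Message(200, "lidar", 1, "scan1")]

ordered = [(m.timestamp_ns, m.topic) for m in replay(camera, lidar)]
print(ordered)
```

The explicit tie-breakers are the design point: if two sensors log at the same timestamp, a replay that ordered them arbitrarily could differ from run to run, which is exactly the kind of mismatch between logs and reality that Tay warns about.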

Drive.ai simulation software navigates a self-driving car around a roundabout.

Testing an Edge Case

Again, one of a simulator’s best tools is the data collected and analyzed by self-driving AI software in live tests. In one example, Tay described a Drive.ai vehicle maneuvering around a delivery truck. The self-driving car was able to nudge past the parked truck, prompting Tay’s team to re-create similar obstacles in simulation with additional conditions.

“We tweaked some parameters and pushed out the truck by a couple of inches. With some of the dimensions, we tilted the angle of the truck, increased its length and width, and at some point the truck just got too wide. There was no longer room for us to pass by the truck, so we had to stop there …”

This being a delivery truck that wasn’t going to move, Tay said that he thinks even a human driver would have likely sought an alternate path; there was no way through. The Drive.ai team wanted to see what their car would do in that situation. They wanted to see if it would try to force its way through and collide or whether it would knowingly stop ahead of time and recognize that it needs to find another route. By incrementally adding complications to scenarios in a simulator, engineers can determine the limits of navigable spaces in the real world.
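A parameter sweep of this kind can be sketched in a few lines of Python. The sketch below is illustrative only and does not reflect Drive.ai's tooling. The `Scenario` fields, the dimensions, and the `can_pass` check are all made-up assumptions. In a real simulator the pass/fail decision would come from running the motion planner, not from the simple width comparison used here as a stand-in.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Scenario:
    """Hypothetical scenario; all dimensions are in centimeters."""
    road_width_cm: int   # drivable width available at the truck
    truck_width_cm: int  # width of the parked truck
    car_width_cm: int    # width of the self-driving vehicle
    margin_cm: int       # clearance required on each side of the car


def can_pass(s: Scenario) -> bool:
    """Stand-in for a planner run: is the remaining gap wide enough?"""
    free_gap = s.road_width_cm - s.truck_width_cm
    return free_gap >= s.car_width_cm + 2 * s.margin_cm


def widest_passable_truck(base: Scenario, step_cm: int = 5,
                          max_steps: int = 200) -> Optional[int]:
    """Widen the truck step by step; return the last width that still passes."""
    last_ok = None
    for i in range(max_steps):
        width = base.truck_width_cm + i * step_cm
        if not can_pass(replace(base, truck_width_cm=width)):
            break  # the truck is now too wide, so the car must stop or reroute
        last_ok = width
    return last_ok


# Made-up numbers, chosen only to exercise the sweep.
base = Scenario(road_width_cm=700, truck_width_cm=240, car_width_cm=170, margin_cm=30)
limit = widest_passable_truck(base)
print(limit)
```

The same loop structure extends to the other variations Tay mentions, such as the truck's angle or length, by sweeping a different field of the scenario.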

In a simulation environment, engineers can take real-world data collected by the self-driving software and apply it within a simulated world without restrictions. In simulation, software can scale almost without limit, and engineers can speed up scenarios and predictions to test the most critical cases. There’s no way to do that in the real world.

“That’s the pivotal point. Realistically, more pragmatically, I’d say it’s not going to be perfect, but do think we’ll always continuously invest in simulations,” Tay said.

Limits of Simulation

Simulation can’t handle everything. Live testing continues to find edge cases that can’t be predicted. These cases can only be experienced, recorded, and then uploaded as a new event in a simulator’s vast scenario library. This, of course, takes time.

A fundamental question for companies is “how much time, money, and human capital should we dedicate to a simulation program?” Even the most finely tuned simulation has fidelity limits. At what point should a company start allocating resources to other departments, and what does that balance look like? This push/pull is familiar throughout companies that rely on simulation for R&D.

“It’s not particular to Drive.ai or any other industry. With simulation, there is this challenge of: ‘How much time do you spend investing in making a better simulator versus how much time do you spend doing the real development work that you’re trying to achieve?’ For us, it’s getting self-driving cars on the road. With limited engineering time, there’s always a trade-off between how much you want to invest in making this simulator realistic – we call it ‘high-fidelity’ – versus, ‘Let’s spend actual time doing development, like our self-driving algorithms,’” Tay said.

Tay said many companies can achieve suitable simulation using less than 50% of their engineering time. While Drive.ai has developers working on algorithms for new edge cases, it’s not the company’s sole focus.

For Drive.ai, only a small fraction of its engineering resources goes to simulation. The rest is used for perception and motion planning, which Tay considers “the actual pioneering work of self-driving cars.”

Drive.ai has logged enough simulated miles to feel confident, but it is not ready to rest on its DPS laurels. Tay said Drive.ai still plans to keep developing its simulator in spite of inherent limitations and other engineering resource needs.

Filed Under: Automotive, The Robot Report
